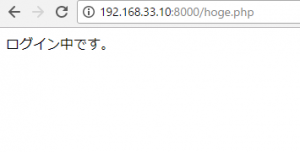

If the session holds a value, show the content; otherwise, redirect to the login page.

```php
<?php
session_start();

if (isset($_SESSION["username"])) {
    echo "Logged in.";
} else {
    header('Location: login.php');
    exit; // stop here so nothing else is sent after the redirect
}
```

I see.

If the session has been destroyed or the value is null, redirect to the login page.

I think the basic login and sign-up features are done now?
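The `isset()` check already covers both cases: it returns `false` when the key is missing (for example on the next request after the logout page has run `session_destroy()`) and also when the value is `null`. A minimal sketch of the guard pulled out into functions so it can be reused on every protected page; `is_logged_in` and `require_login` are hypothetical names, not part of the original code.

```php
<?php
// Hypothetical helper: true only when a non-null username is stored.
// isset() is false both for a missing key and for a key set to null.
function is_logged_in(array $session): bool
{
    return isset($session["username"]);
}

// Hypothetical guard for protected pages: redirect and stop if not logged in.
function require_login(): void
{
    // $_SESSION does not exist until session_start(), so fall back to [].
    if (!is_logged_in($_SESSION ?? [])) {
        header('Location: login.php');
        exit; // stop so the protected content is never sent
    }
}
```

A protected page would then call `session_start();` followed by `require_login();` at the top.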


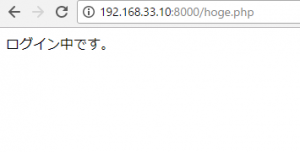


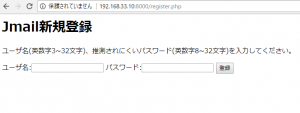

Validation: the ID must be 3 to 32 alphanumeric characters, and the password 8 to 32 alphanumeric characters.

```php
<?php
require_once("auth.php");

$mysqli = connect_mysql();

$status = "none";

if (!empty($_POST["username"]) && !empty($_POST["password"])) {
    // The D modifier stops $ from also matching before a trailing newline
    if (!preg_match('/^[0-9a-zA-Z]{3,32}$/D', $_POST["username"])) {
        // Username check failed
        $status = "error_username";
    } elseif (!preg_match('/^[0-9a-zA-Z]{8,32}$/D', $_POST["password"])) {
        // Password check failed
        $status = "error_password";
    } else {
        $password = password_hash($_POST["password"], PASSWORD_DEFAULT);
        $stmt = $mysqli->prepare("INSERT INTO users VALUES (?, ?)");
        $stmt->bind_param('ss', $_POST["username"], $password);

        try {
            $status = $stmt->execute() ? "ok" : "failed";
        } catch (mysqli_sql_exception $e) {
            // PHP 8.1+ throws by default, e.g. on a duplicate username
            $status = "failed";
        }
    }
}
?>

<head>
<script src="https://code.jquery.com/jquery-2.0.0.min.js"></script>
<script src="register_check.js"></script>
</head>

<h1>Jmail Sign-up</h1>

<?php if ($status == "ok"): ?>
<p>Registration complete.</p>
<?php elseif ($status == "failed"): ?>
<p>Error: that username already exists.</p>
<?php elseif ($status == "none"): ?>
<p>Enter a username (3 to 32 alphanumeric characters) and a hard-to-guess password (8 to 32 alphanumeric characters).</p>
<form method="POST" action="">
Username: <input type="text" name="username">
Password: <input type="password" name="password">
<input type="submit" value="Register">
</form>
<?php else: ?>
<p>Input error: check the format of the username and password.</p>
<?php endif; ?>
```

register_check.js

```javascript
$(function(){
  // Check the same rules as the server before submitting
  $("form").submit(function(){
    if (!$("input[name=username]").val().match(/^[0-9a-zA-Z]{3,32}$/)
        || !$("input[name=password]").val().match(/^[0-9a-zA-Z]{8,32}$/)) {
      alert("Input error");
      return false; // cancel the submission
    }
    return true;
  });
});
```
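The rules are checked twice: in `register_check.js` for quick feedback, and again in PHP because a client-side check can be bypassed. One PCRE subtlety: without the `D` modifier, `$` also matches just before a trailing newline, so a pattern like `/^[0-9a-zA-Z]{3,32}$/` accepts `"abc\n"`. Anchoring with `\A` and `\z` avoids this. A minimal sketch of the server-side rules as one function; `validate_registration` and the `"valid"` return value are hypothetical, while the error statuses match the register page.

```php
<?php
// Hypothetical helper returning the register page's error statuses.
// \A and \z anchor to the absolute start and end, so a trailing "\n" is rejected.
function validate_registration(string $username, string $password): string
{
    if (!preg_match('/\A[0-9a-zA-Z]{3,32}\z/', $username)) {
        return "error_username";
    }
    if (!preg_match('/\A[0-9a-zA-Z]{8,32}\z/', $password)) {
        return "error_password";
    }
    return "valid";
}
```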

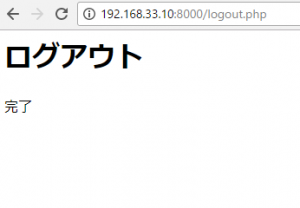

```php
<?php
session_start();
$_SESSION = array();
session_destroy();
?>
<h1>Logout</h1>
<p>Done.</p>
```
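The PHP manual notes that `session_destroy()` does not remove the session cookie from the browser; to end the session completely, the cookie should be expired too. A sketch following the pattern shown on the `session_destroy()` manual page; the function name `logout` is hypothetical.

```php
<?php
// Hypothetical helper: fully end the current session.
function logout(): void
{
    if (session_status() !== PHP_SESSION_ACTIVE) {
        session_start();
    }
    // Clear all session variables.
    $_SESSION = [];
    // Expire the session cookie, using the same parameters it was set with.
    if (ini_get("session.use_cookies")) {
        $params = session_get_cookie_params();
        setcookie(session_name(), "", time() - 42000,
            $params["path"], $params["domain"],
            $params["secure"], $params["httponly"]);
    }
    // Delete the session data on the server.
    session_destroy();
}
```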

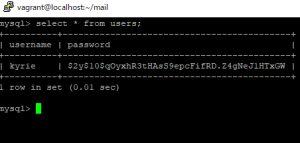

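While building the login feature, `password_verify` kept failing for some reason. The cause was that the password column had been created too short when I ran CREATE TABLE.

I changed password to 255 characters:

```sql
create table mail.users( username varchar(41) unique, password varchar(255) );
```

After hashing the password again with password_hash (re-registering the user), it worked.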
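Why the short column breaks `password_verify`: a bcrypt hash from `password_hash()` is 60 characters long, so a `varchar(41)` column cannot hold it. In non-strict SQL mode MySQL silently truncates the value (in strict mode the INSERT fails instead), and a truncated hash can never verify. The PHP manual recommends a 255-character column because `PASSWORD_DEFAULT` may move to a longer algorithm later. A small sketch that reproduces the failure without a database; `secret123` is just example data.

```php
<?php
// Example password; PASSWORD_BCRYPT is used explicitly so the length is fixed at 60.
$hash = password_hash("secret123", PASSWORD_BCRYPT);

// What a varchar(41) column would keep after truncation.
$truncated = substr($hash, 0, 41);

var_dump(strlen($hash));                             // int(60)
var_dump(password_verify("secret123", $hash));       // bool(true)
var_dump(password_verify("secret123", $truncated));  // bool(false)
```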

```php
<?php
session_start();

$mysqli = new mysqli('localhost', 'hoge', 'hogehoge', 'mail');

$status = "none";

if (isset($_SESSION["username"])) {
    // Already logged in
    $status = "logged_in";
} elseif (!empty($_POST["username"]) && !empty($_POST["password"])) {
    // Look up the stored hash for this username
    $stmt = $mysqli->prepare("SELECT password FROM users WHERE username = ?");
    $stmt->bind_param('s', $_POST["username"]);
    $stmt->execute();
    $stmt->store_result();

    $status = "failed";
    if ($stmt->num_rows == 1) {
        $stmt->bind_result($pass);
        $stmt->fetch();
        // Compare the submitted password with the stored hash
        if (password_verify($_POST["password"], $pass)) {
            session_regenerate_id(true); // new session ID to prevent session fixation
            $_SESSION["username"] = $_POST["username"];
            $status = "ok";
        }
    }
}
?>
```

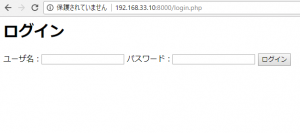

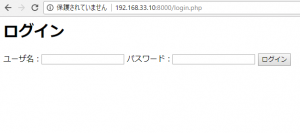

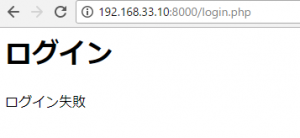

```php
<h1>Login</h1>

<?php if ($status == "logged_in"): ?>
<p>Already logged in.</p>
<?php elseif ($status == "ok"): ?>
<p>Login successful.</p>
<?php elseif ($status == "failed"): ?>
<p>Login failed.</p>
<?php else: ?>
<form method="POST" action="login.php">
Username: <input type="text" name="username" />
Password: <input type="password" name="password" />
<input type="submit" value="Log in" />
</form>
<?php endif; ?>
```
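The branching in the login code boils down to one decision: fail if the username was not found or if the password does not match the stored hash. A minimal sketch of that decision as a pure function, so it can be checked without a database; `login_status` is a hypothetical name, and `$storedHash` is assumed to be `null` when the SELECT returned no row.

```php
<?php
// Hypothetical helper: decide the login status from the looked-up hash.
// $storedHash is null when no user with that name exists.
function login_status(?string $storedHash, string $password): string
{
    if ($storedHash === null) {
        return "failed"; // unknown username
    }
    return password_verify($password, $storedHash) ? "ok" : "failed";
}
```

Returning the same `"failed"` status for an unknown user and a wrong password also avoids telling an attacker which usernames exist.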

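DB table

```sql
-- password varchar(41) is too short for password_hash() output; later changed to varchar(255)
create table mail.users( username varchar(41) unique, password varchar(41) );
```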

register.php

```php
<?php
$mysqli = new mysqli('localhost', 'hoge', 'hogehoge', 'mail');

$status = "none";

if (!empty($_POST["username"]) && !empty($_POST["password"])) {
    // Store only the hash, never the plain password
    $password = password_hash($_POST["password"], PASSWORD_DEFAULT);
    $stmt = $mysqli->prepare("INSERT INTO users VALUES (?, ?)");
    $stmt->bind_param('ss', $_POST["username"], $password);

    try {
        $status = $stmt->execute() ? "ok" : "failed";
    } catch (mysqli_sql_exception $e) {
        // PHP 8.1+ throws by default, e.g. on a duplicate username
        $status = "failed";
    }
}
?>
```

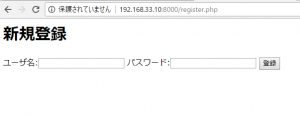

```php
<h1>Sign-up</h1>

<?php if ($status == "ok"): ?>
<p>Registration complete.</p>
<?php elseif ($status == "failed"): ?>
<p>Error: that username already exists.</p>
<?php else: ?>
<form method="POST" action="">
Username: <input type="text" name="username">
Password: <input type="password" name="password">
<input type="submit" value="Register">
</form>
<?php endif; ?>
```

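DB

I see.

Since I want to start with a simple design, the MySQL side will probably need about three tables: (1) account, (2) send, and (3) receive.

When a mail is sent, the message is saved both in the send table and in the receive table for the address given in `to`, so the sender and the recipient can each see it. When the recipient opens the mail, the alreadyread flag is set.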

```sql
create table mail.account(
  id int unsigned auto_increment primary key,
  address varchar(255),
  passwords varchar(255),
  name varchar(255)
);
```

```sql
-- `to`, `from` and `delete` are reserved words in MySQL, so they must be quoted with backticks
create table mail.send(
  id int unsigned auto_increment primary key,
  accountid varchar(255),
  `to` varchar(255),
  subject varchar(255),
  body varchar(255),
  file1 varchar(255),
  file2 varchar(255),
  alreadyread int,
  sendtime datetime default null
);

create table mail.receive(
  id int unsigned auto_increment primary key,
  accountid varchar(255),
  subject varchar(255),
  body varchar(255),
  file1 varchar(255),
  file2 varchar(255),
  `to` varchar(255),
  `from` varchar(255),
  alreadyread int,
  junk int,
  `delete` int,
  receivetime datetime default null
);
```
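The send/receive design can be tried out in memory before touching MySQL: sending writes one copy to the sender's `send` rows and one to the recipient's `receive` rows, and opening a message sets `alreadyread`. The class and method names below (`MailStore`, `send`, `unreadCount`, `markRead`) are hypothetical, PHP arrays stand in for the tables, and both copies share one id for simplicity (in MySQL each table would assign its own auto_increment id).

```php
<?php
// Hypothetical in-memory stand-in for the send and receive tables.
final class MailStore
{
    private array $send = [];
    private array $receive = [];
    private int $nextId = 1;

    // Store the message once for the sender and once for the recipient.
    public function send(string $from, string $to, string $subject, string $body): int
    {
        $id = $this->nextId++;
        $this->send[$id] = ["accountid" => $from, "to" => $to,
            "subject" => $subject, "body" => $body];
        $this->receive[$id] = ["accountid" => $to, "from" => $from,
            "subject" => $subject, "body" => $body, "alreadyread" => 0];
        return $id;
    }

    // Count unread messages in one recipient's inbox.
    public function unreadCount(string $account): int
    {
        $n = 0;
        foreach ($this->receive as $row) {
            if ($row["accountid"] === $account && $row["alreadyread"] === 0) {
                $n++;
            }
        }
        return $n;
    }

    // Set the read flag when the recipient opens the message.
    public function markRead(int $id): void
    {
        $this->receive[$id]["alreadyread"] = 1;
    }
}
```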

Argh, I still need to build the sign-up and login forms...

It seems the trend at investment trust companies and similar firms is to have no mail form at all, but...

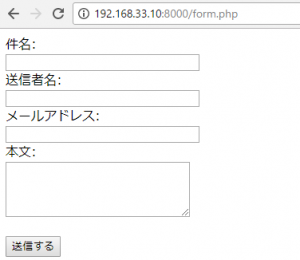

Next, I'll use mb_send_mail. Because mail goes through Postfix set up on Vagrant, the From header behaves differently.

form.php

```html
<title>Form</title>
<body>
<form action="send.php" method="post">
  Subject:<br>
  <input type="text" name="subject" size="30" value=""/><br>
  Sender name:<br>
  <input type="text" name="name" size="30" value=""/><br>
  Email address:<br>
  <input type="text" name="mail" size="30" value=""/><br>
  Message:<br>
  <textarea name="message" cols="30" rows="5"></textarea><br>
  <br>
  <input type="submit" value="Send"/>
</form>
</body>
```

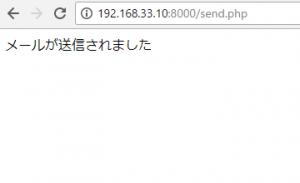

send.php

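```php
<?php
$from = $_POST["mail"] ?? "";
// Reject invalid addresses and any line breaks, which could inject extra headers
if (filter_var($from, FILTER_VALIDATE_EMAIL) === false || preg_match('/[\r\n]/', $from)) {
    exit("error: invalid email address");
}

$message = "Name: " . htmlspecialchars($_POST["name"]) . "\nMessage: " . htmlspecialchars($_POST["message"]);

if (!mb_send_mail("hoge@gmail.com", $_POST["subject"], $message, "From: " . $from)) {
    exit("error");
}
?>

<p>The email has been sent.</p>
```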
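Putting the visitor's address directly in `From` has two risks: header injection (a value with line breaks can add headers such as `Bcc`), and relays rewriting or rejecting a `From` address the server does not own, which may be related to the `From` behavior seen with Postfix. One common approach is to send from a fixed server address and put the visitor's address in `Reply-To`. A sketch, assuming a hypothetical sender address `noreply@example.com` and a hypothetical helper name `build_headers`:

```php
<?php
// Hypothetical helper: build the extra headers for mb_send_mail().
// Returns null when the visitor's address is unusable.
function build_headers(string $visitorMail): ?string
{
    // Reject invalid addresses and any CR/LF that could start a new header line.
    if (filter_var($visitorMail, FILTER_VALIDATE_EMAIL) === false
        || preg_match('/[\r\n]/', $visitorMail)) {
        return null;
    }
    // Fixed sender owned by the server (assumption); replies go to the visitor.
    return "From: noreply@example.com\r\nReply-To: " . $visitorMail;
}
```

The result would be passed as the fourth argument, e.g. `mb_send_mail("hoge@gmail.com", $subject, $message, $headers)`, after checking it is not `null`.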

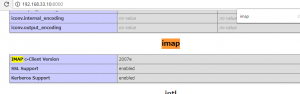

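After resolving Postfix's dependency issue (remi), I ran `sudo yum install php-imap` and added `extension=imap.so` to php.ini, but it never showed up in phpinfo().

I tried vagrant halt, edited php.ini again, and spent about five hours trying things from other sites, with no luck at all.

I gave up, ate some crispy fried noodles (kata-yakisoba), ran vagrant up again, and there it was!? Why? I have no idea.

At least no errors appear now.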

```php
<?php
$imapPath = '{imap.gmail.com:993/imap/ssl/novalidate-cert}INBOX';
$username = 'hoge@gmail.com';
$password = 'pass';

// Try to connect
$inbox = imap_open($imapPath, $username, $password) or die('Cannot connect to Gmail: ' . imap_last_error());
```

```php
$mailHost = 'imap.googlemail.com';
$mailPort = 993;
$mailAccount = 'hoge@gmail.com';
$mailPassword = '';
```

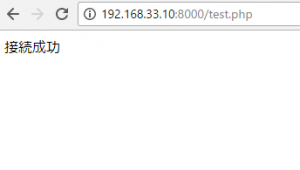

```php
$mailBox = imap_open('{' . $mailHost . ':' . $mailPort . '/novalidate-cert/imap/ssl}' . "INBOX", $mailAccount, $mailPassword);

if (!$mailBox) {
    echo 'Connection failed';
} else {
    echo 'Connection succeeded';
}
```
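`imap_open()` takes the server and folder as one string of the form `{host:port/flags}MAILBOX`, which is why both snippets concatenate braces by hand. A sketch of a helper that assembles it; `imap_mailbox` is a hypothetical name. Note that `novalidate-cert` turns off TLS certificate checking, so for a public server like Gmail it is safer to drop it once the connection works.

```php
<?php
// Hypothetical helper: build the mailbox string that imap_open() expects.
function imap_mailbox(string $host, int $port, array $flags, string $folder = "INBOX"): string
{
    // Flags are joined as "/imap/ssl" etc.; no flags means no slash part.
    $flagPart = $flags === [] ? "" : "/" . implode("/", $flags);
    return "{" . $host . ":" . $port . $flagPart . "}" . $folder;
}
```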

Wait, what?

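Apparently, if the server has no global domain configured, it cannot send or receive mail. The reason is that a domain like mail.hoge.local is only valid inside the LAN and cannot be resolved on the public internet.

Perhaps because personal information is involved, the rules are quite strict...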

In vim, after searching with /query,

press "n" to jump to the next match ("N" goes to the previous one).