[vagrant@localhost laravel]$ php artisan make:model Document --migration

Model created successfully.

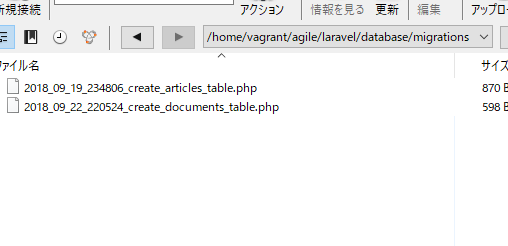

Created Migration: 2018_09_22_220524_create_documents_table

    public function up()
    {
        Schema::create('documents', function (Blueprint $table) {
            $table->increments('id');
            $table->unsignedInteger('article_id'); // id of the parent article
            $table->string('body');
            $table->timestamps();
            // Deleting an article also deletes its documents
            $table->foreign('article_id')->references('id')->on('articles')->onDelete('cascade');
        });
    }

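Coding and design really do use different parts of the brain. If you don't work out the design first, you end up with a slapdash service built on whatever came to mind.

I'm not sure which column type to use for the date. `timestamps` looks like it would store yyyy-mm-dd hh:mm:ss, so for now `published_at` is a plain string, and `body` becomes `text`.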

    public function up()
    {
        Schema::create('documents', function (Blueprint $table) {
            $table->increments('id');
            $table->unsignedInteger('article_id'); // id of the parent article
            $table->string('mobile');
            $table->string('published_at');
            $table->text('body');
            $table->timestamps();
            // Deleting an article also deletes its documents
            $table->foreign('article_id')->references('id')->on('articles')->onDelete('cascade');
        });
    }

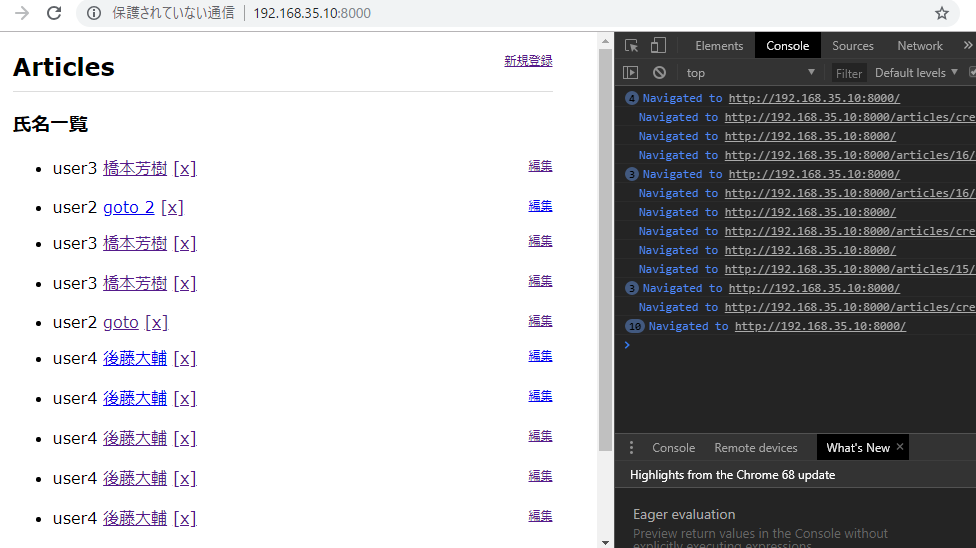
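On the date question: Laravel's schema builder also has a `date` column type, which holds only the calendar date, whereas `timestamps()` just adds the `created_at` and `updated_at` columns. Below is a rough sketch of the same migration with `published_at` as a date. It is a variation, not what this post actually migrates, and it assumes the column never needs a time component. The `down()` method is the usual generated one and is an assumption here, since the post does not show it.

```php
<?php

use Illuminate\Support\Facades\Schema;
use Illuminate\Database\Schema\Blueprint;
use Illuminate\Database\Migrations\Migration;

class CreateDocumentsTable extends Migration
{
    public function up()
    {
        Schema::create('documents', function (Blueprint $table) {
            $table->increments('id');
            $table->unsignedInteger('article_id');
            $table->string('mobile');
            $table->date('published_at'); // DATE column instead of a string
            $table->text('body');
            $table->timestamps();         // created_at / updated_at
            $table->foreign('article_id')->references('id')->on('articles')->onDelete('cascade');
        });
    }

    public function down()
    {
        // Assumed rollback step; not shown in the original post
        Schema::dropIfExists('documents');
    }
}
```

If the column is a real date, adding `protected $casts = ['published_at' => 'date'];` to the model should let Eloquent hand it back as a date object rather than a raw string.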

Run the migration.

[vagrant@localhost laravel]$ php artisan migrate

Migrating: 2018_09_22_220524_create_documents_table

Migrated: 2018_09_22_220524_create_documents_table

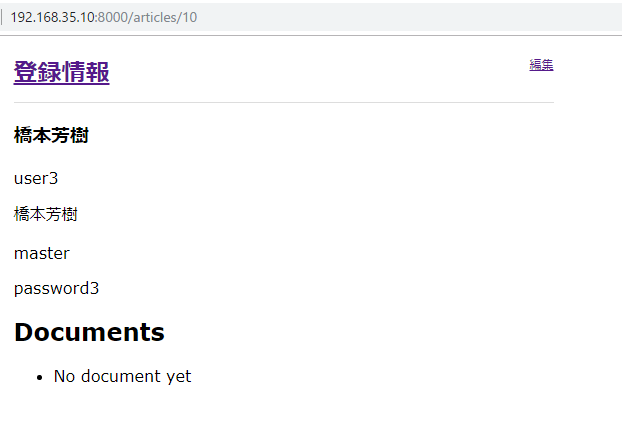

belongsTo, in Document.php:

    namespace App;

    use Illuminate\Database\Eloquent\Model;

    class Document extends Model
    {
        // Columns that may be mass-assigned
        protected $fillable = ['mobile', 'published_at', 'body'];

        // Each document belongs to one article (via documents.article_id)
        public function article()
        {
            return $this->belongsTo('App\Article');
        }
    }

And the Article.php side:

class Article extends Model

{

//

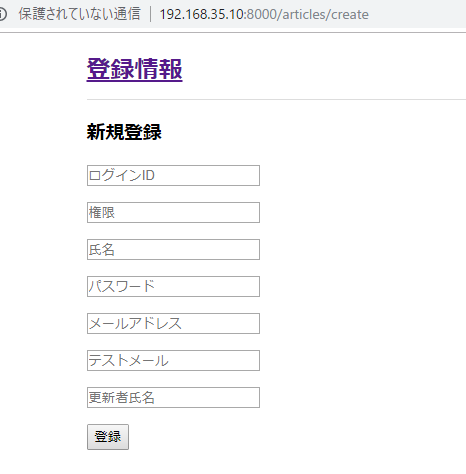

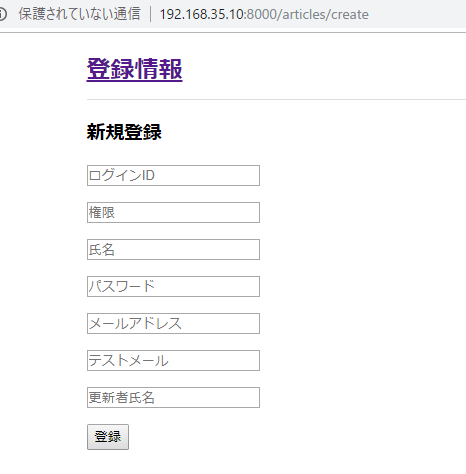

protected $fillable = ['login_id','role','name','password','mail','test_mail','updated_person'];

public function documments(){

return $this->hasMany('App\Document')

}

}

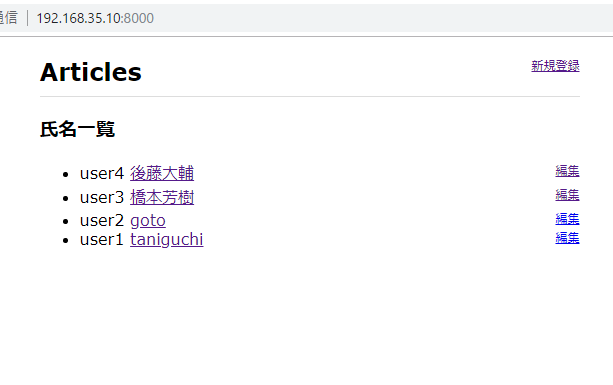

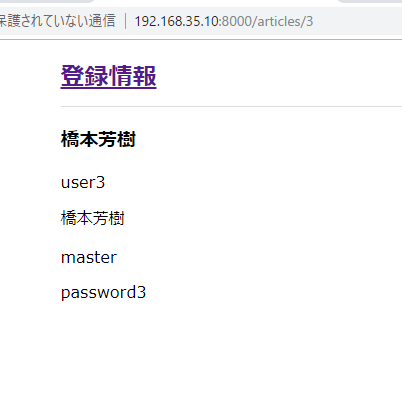

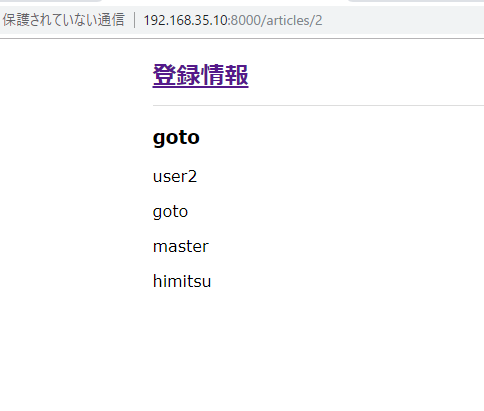

The association seems to be working.

So it can be done after all.
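One way to check the association by hand is from `php artisan tinker`. The lines below are only a sketch under a few assumptions: at least one row already exists in `articles`, the relation method on `Article` is named `documents`, and every sample value is made up for illustration.

```php
// Run inside `php artisan tinker`; all sample values are hypothetical.
$article = App\Article::first(); // assumes at least one article exists

// Creating through the relation sets article_id automatically,
// so article_id does not have to be listed in $fillable.
$document = $article->documents()->create([
    'mobile'       => 'sample mobile value',
    'published_at' => '2018-09-23',
    'body'         => 'sample body',
]);

// Walk the relation in both directions.
$document->article;              // the parent Article model
$article->documents()->count();  // how many documents this article has
```

Going through `$article->documents()` rather than `Document::create()` keeps the foreign key out of the mass-assignable list, which matches the `$fillable` array on `Document`.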