$ composer create-project --prefer-dist laravel/laravel stancl

$ cd stancl

$ composer require laravel/jetstream

$ php artisan jetstream:install livewire

$ composer require stancl/tenancy

$ php artisan tenancy:install

$ php artisan migrate

mysql> show tables;

+------------------------+

| Tables_in_stancl       |

+------------------------+

| domains                |

| failed_jobs            |

| migrations             |

| password_resets        |

| personal_access_tokens |

| sessions               |

| tenants                |

| users                  |

+------------------------+

8 rows in set (0.01 sec)

config/app.php

App\Providers\TenancyServiceProvider::class,

app/Models/Tenant.php

namespace App\Models;

use Stancl\Tenancy\Database\Models\Tenant as BaseTenant;

use Stancl\Tenancy\Contracts\TenantWithDatabase;

use Stancl\Tenancy\Database\Concerns\HasDatabase;

use Stancl\Tenancy\Database\Concerns\HasDomains;

class Tenant extends BaseTenant implements TenantWithDatabase {

use HasDatabase, HasDomains;

}

config/tenancy.php

'tenant_model' => \App\Models\Tenant::class,

### Central routes

app/Providers/RouteServiceProvider.php

protected function mapWebRoutes(){

foreach($this->centralDomains() as $domain){

Route::middleware('web')

->domain($domain)

->namespace($this->namespace)

->group(base_path('routes/web.php'));

}

}

protected function mapApiRoutes(){

foreach($this->centralDomains() as $domain){

Route::prefix('api')

->domain($domain)

->middleware('api')

->namespace($this->namespace)

->group(base_path('routes/api.php'));

}

}

protected function centralDomains(): array {

return config('tenancy.central_domains');

}

public function boot()

{

$this->configureRateLimiting();

// $this->routes(function () {

// Route::prefix('api')

// ->middleware('api')

// ->namespace($this->namespace)

// ->group(base_path('routes/api.php'));

// Route::middleware('web')

// ->namespace($this->namespace)

// ->group(base_path('routes/web.php'));

// });

$this->mapWebRoutes();

$this->mapApiRoutes();

}

config/tenancy.php

'central_domains' => [

// '127.0.0.1',

'192.168.33.10',

// 'localhost',

],

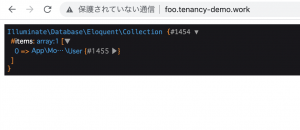

routes/tenant.php

Route::get('/', function () {

dd(\App\Models\User::all());

return 'This is your multi-tenant application. The id of the current tenant is ' . tenant('id');

});

Move the users table migration (database/migrations/2014_10_12_000000_create_users_table.php or similar) into database/migrations/tenant, so that the users table is created in each tenant database rather than in the central one.
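The move described above can be sketched in shell. `move_users_migration` is a hypothetical helper name, not part of Laravel or stancl/tenancy, and the timestamp prefix of the migration file is matched with a glob because it may differ between Laravel versions.

```shell
# Sketch: move the users migration into the tenant migrations directory.
# move_users_migration is a hypothetical helper; pass the project root (defaults to ".").
move_users_migration() {
  root="${1:-.}"
  mkdir -p "$root/database/migrations/tenant"
  for f in "$root"/database/migrations/*_create_users_table.php; do
    # Skip the unexpanded glob pattern when no matching file exists.
    [ -e "$f" ] || continue
    mv "$f" "$root/database/migrations/tenant/"
  done
}

# Usage, from the project root:
#   move_users_migration .
#   php artisan tenants:migrate   # run the tenant migrations for existing tenants
```

`tenants:migrate` is the command stancl/tenancy provides for running the migrations in database/migrations/tenant against the tenant databases.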

$ php artisan tinker

>>> $tenant1 = App\Models\Tenant::create(['id' => 'hoge']);

>>> $tenant1->domains()->create(['domain' => 'hoge.192.168.33.10']);

=> Stancl\Tenancy\Database\Models\Domain {#4734

domain: "hoge.192.168.33.10",

tenant_id: "hoge",

updated_at: "2021-08-08 11:59:44",

created_at: "2021-08-08 11:59:44",

id: 1,

tenant: App\Models\Tenant {#4792

id: "hoge",

created_at: "2021-08-08 11:59:35",

updated_at: "2021-08-08 11:59:35",

data: null,

tenancy_db_name: "tenanthoge",

},

}

>>> $tenant2 = App\Models\Tenant::create(['id' => 'bar']);

=> App\Models\Tenant {#4794

id: "bar",

data: null,

updated_at: "2021-08-08 11:59:50",

tenancy_db_name: "tenantbar",

}

>>> $tenant2->domains()->create(['domain' => 'bar.192.168.33.10']);

=> Stancl\Tenancy\Database\Models\Domain {#4786

domain: "bar.192.168.33.10",

tenant_id: "bar",

updated_at: "2021-08-08 11:59:55",

created_at: "2021-08-08 11:59:55",

id: 2,

tenant: App\Models\Tenant {#3788

id: "bar",

created_at: "2021-08-08 11:59:50",

updated_at: "2021-08-08 11:59:50",

data: null,

tenancy_db_name: "tenantbar",

},

}

$ php artisan make:seeder TenantTableSeeder

public function run()

{

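// Run the callback once per tenant, with that tenant's database active.

\App\Models\Tenant::all()->runForEach(function () {

// Laravel 8 removed the factory() helper; use the model's class-based factory.

\App\Models\User::factory()->create();

});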

}
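Running the seeder could look like the sketch below. `run_tenant_seeder` is a hypothetical wrapper added here only to guard against running from the wrong directory; the command it wraps is Laravel's standard `db:seed` with the seeder class generated above.

```shell
# Sketch: run the tenant seeder from the project root.
# run_tenant_seeder is a hypothetical wrapper around the standard db:seed command.
run_tenant_seeder() {
  # artisan lives in the project root; bail out early if we are somewhere else.
  if [ ! -f artisan ]; then
    echo "artisan not found; run this from the project root" >&2
    return 1
  fi
  php artisan db:seed --class=TenantTableSeeder
}

# Usage:
#   cd stancl && run_tenant_seeder
```

Because the seeder itself loops over the tenants with `runForEach`, it is started through the ordinary central `db:seed` command in this sketch.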

mysql> select * from tenants;

+------+---------------------+---------------------+-----------------------------------+

| id   | created_at          | updated_at          | data                              |

+------+---------------------+---------------------+-----------------------------------+

| bar  | 2021-08-08 11:59:50 | 2021-08-08 11:59:50 | {"tenancy_db_name": "tenantbar"}  |

| foo  | 2021-08-08 11:53:06 | 2021-08-08 11:53:06 | {"tenancy_db_name": "tenantfoo"}  |

| hoge | 2021-08-08 11:59:35 | 2021-08-08 11:59:35 | {"tenancy_db_name": "tenanthoge"} |

+------+---------------------+---------------------+-----------------------------------+

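I understand the overall flow now, but the tenant hostnames (hoge.192.168.33.10 and so on) do not resolve...

I suppose this, too, has to be done with a real domain.

1. Register a domain with お名前.com and deploy the app to a VPS.

2. On the お名前.com side, set a wildcard A record in the DNS settings; on the VPS side, set a CNAME; then try again.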
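Before retrying in the browser, the wildcard A record can be checked from a terminal. The sketch below assumes `dig` is installed; `check_tenant_dns` is a hypothetical helper, and `example.com` in the usage line stands in for the domain you actually registered.

```shell
# Sketch: print what each tenant subdomain resolves to.
# check_tenant_dns is a hypothetical helper: first argument is the base domain,
# the remaining arguments are tenant subdomain labels.
check_tenant_dns() {
  base="$1"
  shift
  for tenant in "$@"; do
    host="$tenant.$base"
    # dig +short prints only the answer records (nothing if the name does not resolve).
    # +time and +tries keep the check from hanging when no resolver is reachable.
    ips=$(dig +short +time=2 +tries=1 "$host" A | tr '\n' ' ')
    printf '%s -> %s\n' "$host" "$ips"
  done
}

# Usage (example.com is a placeholder):
#   check_tenant_dns example.com hoge bar
```

If the wildcard record is in place, every tenant subdomain should print the IP address of the VPS; an empty right-hand side means that name does not resolve yet.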

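It worked!!!

Woo-hoo!

So the tenant migration files go under database/migrations/tenant/.

When developing a multi-tenant app, tenant subdomains under localhost or a bare IP address such as 192.168.33.10 cannot be resolved, so you need to obtain a real domain in order to test.

Yeah, this is quite a lot of work.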