```bash
$ sudo npm install -g create-react-app
$ create-react-app lottery-react
$ cd lottery-react
$ npm run start
```

Open http://192.168.34.10:3000/ in a browser to see the app.

```bash
$ sudo npm install -g yarn
$ yarn -v
1.22.5
```

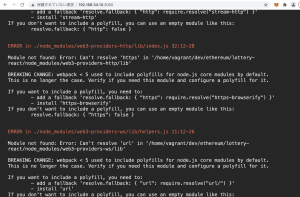

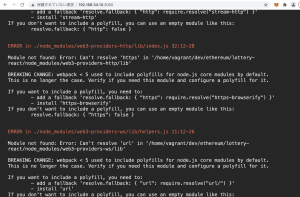

```bash
# inside lottery-react
$ npm install --save web3@1.0.0-beta.26

# separate project for the contract
$ cd ..
$ mkdir lottery
$ cd lottery
$ sudo npm install solc@0.4.17
$ npm install --save mocha ganache-cli web3@1.0.0-beta.26
$ npm install --save truffle-hdwallet-provider@0.0.3
```

contracts/Lottery.sol

```solidity
pragma solidity ^0.4.17;

contract Lottery {
    address public manager;
    address[] public players;

    // Constructor (0.4.x style: a function with the contract's name)
    function Lottery() public {
        manager = msg.sender;
    }

    // Anyone can enter by sending more than 0.01 ether
    function enter() public payable {
        require(msg.value > .01 ether);
        players.push(msg.sender);
    }

    // Pseudo-random only: miners can influence these inputs
    function random() private view returns (uint) {
        return uint(keccak256(block.difficulty, now, players));
    }

    // Only the manager can pick a winner, who receives the whole balance
    function pickWinner() public restricted {
        uint index = random() % players.length;
        players[index].transfer(this.balance);
        players = new address[](0); // reset for the next round
    }

    modifier restricted() {
        require(msg.sender == manager);
        _;
    }

    function getPlayers() public view returns (address[]) {
        return players;
    }
}
```
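To check my understanding of the contract's rules, here is a minimal in-memory model in plain Node.js. It is a sketch, not the contract: `LotterySim` and its methods are hypothetical names, amounts are BigInt wei, and SHA-256 over a caller-supplied seed stands in for `keccak256(block.difficulty, now, players)`. The empty-players check mirrors the fact that `random() % players.length` would fail on-chain when nobody has entered.

```javascript
// Hypothetical in-memory model of the Lottery rules (not on-chain code).
const crypto = require('crypto');

const WEI_PER_ETHER = 10n ** 18n;
const MIN_ENTRY_WEI = WEI_PER_ETHER / 100n; // .01 ether = 10^16 wei

class LotterySim {
  constructor(managerAddress) {
    this.manager = managerAddress; // like `manager = msg.sender` in the constructor
    this.players = [];
    this.balance = 0n; // contract balance in wei
  }

  enter(sender, valueWei) {
    // Mirrors require(msg.value > .01 ether): strictly greater than
    if (valueWei <= MIN_ENTRY_WEI) throw new Error('entry must exceed 0.01 ether');
    this.players.push(sender);
    this.balance += valueWei;
  }

  random(seed) {
    // Stand-in for keccak256: SHA-256 of a seed plus the player list
    const digest = crypto
      .createHash('sha256')
      .update(String(seed) + this.players.join(','))
      .digest('hex');
    return BigInt('0x' + digest);
  }

  pickWinner(sender, seed) {
    // Mirrors the `restricted` modifier
    if (sender !== this.manager) throw new Error('only the manager can pick a winner');
    // On-chain, modulo by zero players would make the call fail
    if (this.players.length === 0) throw new Error('no players');
    const index = Number(this.random(seed) % BigInt(this.players.length));
    const winner = this.players[index];
    const prize = this.balance;
    this.balance = 0n; // transfer(this.balance) empties the contract
    this.players = []; // players = new address[](0)
    return { winner, prize };
  }
}
```

Note the strict `>`: entering with exactly 0.01 ether is rejected in both the model and the contract.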

compiler.js

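```javascript
const path = require('path');
const fs = require('fs');
const solc = require('solc');

// Read contracts/Lottery.sol relative to this script
const lotteryPath = path.resolve(__dirname, 'contracts', 'Lottery.sol');
const source = fs.readFileSync(lotteryPath, 'utf8');

// solc 0.4.x legacy API: the second argument 1 enables the optimizer
console.log(solc.compile(source, 1));
```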
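compiler.js only prints the compiler output. As far as I know, the solc 0.4.x legacy API returns an object whose `contracts` map is keyed by `':' + contractName` for a single unnamed source, with the ABI as a JSON string in `interface` and the deployable code in `bytecode`; this should be checked against what the script actually prints. Under that assumption, a hypothetical `extractContract` helper could pull both out. Treating every message that does not contain `Warning` as fatal is a heuristic of this sketch.

```javascript
// Hypothetical helper, assuming the solc 0.4.x legacy output shape:
// { contracts: { ':Lottery': { interface: '<ABI JSON string>', bytecode: '...' } }, errors: [...] }
function extractContract(output, contractName) {
  // Heuristic: warnings also arrive in `errors`, so only non-warnings abort
  const fatal = (output.errors || []).filter((msg) => !msg.includes('Warning'));
  if (fatal.length > 0) {
    throw new Error('solc reported:\n' + fatal.join('\n'));
  }
  const entry = output.contracts && output.contracts[':' + contractName];
  if (!entry) {
    throw new Error('contract ' + contractName + ' not found in compiler output');
  }
  return {
    abi: JSON.parse(entry.interface), // `interface` is a JSON string, not an object
    bytecode: entry.bytecode,
  };
}
```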

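ABI stands for Application Binary Interface: the JSON description of a contract's functions (names, inputs, outputs, mutability) that web3 needs in order to call the deployed contract.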
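The ABI also tells web3 how each function has to be invoked. Entries marked `"constant": true` (stateMutability `view` or `pure`) can be read for free with `.call()`, while the others change state and need `.send()` from an account, which matches how App.js calls `manager()`/`getPlayers()` versus `enter()`/`pickWinner()`. A sketch with a hypothetical `classifyAbi` helper over entries trimmed from the ABI in lottery.js:

```javascript
// Hypothetical helper: split ABI functions into read-only (.call()) and
// state-changing (.send()). Entries are trimmed from the Lottery ABI.
const abi = [
  { constant: true, inputs: [], name: 'manager', stateMutability: 'view', type: 'function' },
  { constant: false, inputs: [], name: 'pickWinner', stateMutability: 'nonpayable', type: 'function' },
  { constant: true, inputs: [], name: 'getPlayers', stateMutability: 'view', type: 'function' },
  { constant: false, inputs: [], name: 'enter', payable: true, stateMutability: 'payable', type: 'function' },
  { constant: true, inputs: [{ name: '', type: 'uint256' }], name: 'players', stateMutability: 'view', type: 'function' },
  { inputs: [], stateMutability: 'nonpayable', type: 'constructor' },
];

function classifyAbi(entries) {
  const reads = [];
  const writes = [];
  for (const entry of entries) {
    if (entry.type !== 'function') continue; // skip the constructor (and events, if any)
    const readOnly = entry.constant === true || ['view', 'pure'].includes(entry.stateMutability);
    (readOnly ? reads : writes).push(entry.name);
  }
  return { reads, writes };
}

// reads: manager, getPlayers, players; writes: pickWinner, enter
console.log(classifyAbi(abi));
```

`players` appears as a read-only function taking a `uint256`: Solidity generates that getter for `address[] public players`, and it returns one address per index, which is why the contract also defines `getPlayers()` to return the whole array.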

lottery.js

```javascript
import web3 from './web3';

const address = '0xaC24CD668b4A6d2935b09596F1558c1E305F62F1';

const abi = [
  {
    "constant": true, "inputs": [], "name": "manager",
    "outputs": [{ "name": "", "type": "address" }],
    "payable": false, "stateMutability": "view", "type": "function"
  },
  {
    "constant": false, "inputs": [], "name": "pickWinner",
    "outputs": [],
    "payable": false, "stateMutability": "nonpayable", "type": "function"
  },
  {
    "constant": true, "inputs": [], "name": "getPlayers",
    "outputs": [{ "name": "", "type": "address[]" }],
    "payable": false, "stateMutability": "view", "type": "function"
  },
  {
    "constant": false, "inputs": [], "name": "enter",
    "outputs": [],
    "payable": true, "stateMutability": "payable", "type": "function"
  },
  {
    "constant": true, "inputs": [{ "name": "", "type": "uint256" }], "name": "players",
    "outputs": [{ "name": "", "type": "address" }],
    "payable": false, "stateMutability": "view", "type": "function"
  },
  {
    "inputs": [],
    "payable": false, "stateMutability": "nonpayable", "type": "constructor"
  }
];

export default new web3.eth.Contract(abi, address);
```

web3.js

```javascript
import Web3 from 'web3';

// Use the provider that MetaMask injects into the page as window.web3
const web3 = new Web3(window.web3.currentProvider);

export default web3;
```
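web3.js assumes a wallet such as MetaMask has injected `window.web3`; without it, `window.web3.currentProvider` throws a TypeError before the app renders. A minimal sketch of a guard, using a hypothetical `chooseProvider` helper and an assumed local node URL as the fallback (ganache-cli listens on port 8545 by default):

```javascript
// Hypothetical helper: choose what to pass to `new Web3(...)`.
// `win` stands in for the browser's `window`; `fallbackUrl` is an assumed local node.
function chooseProvider(win, fallbackUrl) {
  if (win && win.web3 && win.web3.currentProvider) {
    return win.web3.currentProvider; // legacy injection that web3.js relies on
  }
  return fallbackUrl; // no injected wallet: fall back to a node URL
}
```

In the browser this would be used as `new Web3(chooseProvider(window, 'http://localhost:8545'))`, relying on web3 1.x accepting an HTTP URL string as a provider. Current MetaMask versions no longer inject `window.web3` and expose `window.ethereum` instead, so with a recent wallet the injected branch would need updating as well.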

App.js

```jsx
import React, { Component } from 'react';
import './App.css';
import web3 from './web3';
import lottery from './lottery';

class App extends Component {
  state = {
    manager: '',
    players: [],
    balance: '',
    value: '',
    message: ''
  };

  async componentDidMount() {
    // Read-only calls: no transaction is sent
    const manager = await lottery.methods.manager().call();
    const players = await lottery.methods.getPlayers().call();
    const balance = await web3.eth.getBalance(lottery.options.address);

    this.setState({ manager, players, balance });
  }

  onSubmit = async (event) => {
    event.preventDefault();

    const accounts = await web3.eth.getAccounts();

    this.setState({ message: 'Waiting on transaction success...' });

    // enter() is payable: send the typed amount converted from ether to wei
    await lottery.methods.enter().send({
      from: accounts[0],
      value: web3.utils.toWei(this.state.value, 'ether')
    });

    this.setState({ message: 'You have been entered!' });
  };

  onClick = async () => {
    const accounts = await web3.eth.getAccounts();

    this.setState({ message: 'Waiting on transaction success...' });

    // Succeeds only if accounts[0] is the manager (the `restricted` modifier)
    await lottery.methods.pickWinner().send({
      from: accounts[0]
    });

    this.setState({ message: 'A winner has been picked!' });
  };

  render() {
    return (
      <div>
        <h2>Lottery Contract</h2>
        <p>
          This contract is managed by {this.state.manager}.
          There are currently {this.state.players.length} people entered,
          competing to win {web3.utils.fromWei(this.state.balance, 'ether')} ether!
        </p>

        <hr />

        <form onSubmit={this.onSubmit}>
          <h4>Want to try your luck?</h4>
          <div>
            <label>Amount of ether to enter</label>
            <input
              value={this.state.value}
              onChange={(event) => this.setState({ value: event.target.value })}
            />
          </div>
          <button>Enter</button>
        </form>

        <hr />

        <h4>Ready to pick a winner?</h4>
        <button onClick={this.onClick}>Pick a winner!</button>

        <hr />

        <h1>{this.state.message}</h1>
      </div>
    );
  }
}

export default App;
```
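App.js relies on `web3.utils.toWei(this.state.value, 'ether')` and `web3.utils.fromWei(..., 'ether')`. The arithmetic behind them is fixed-point: 1 ether = 10^18 wei, so the contract's `.01 ether` is 10^16 wei. The functions below are hypothetical BigInt re-implementations (`etherToWei`, `weiToEther`) that only illustrate that arithmetic; they are not web3's code.

```javascript
// Hypothetical illustration of ether <-> wei conversion (not web3's implementation).
const WEI_PER_ETHER = 10n ** 18n;

function etherToWei(etherString) {
  const match = /^(\d*)(?:\.(\d*))?$/.exec(etherString.trim());
  if (!match || (match[1] === '' && !match[2])) {
    throw new Error('not a decimal ether amount: ' + etherString);
  }
  const whole = match[1] || '0';
  const fraction = match[2] || '';
  if (fraction.length > 18) throw new Error('more than 18 decimal places');
  // Pad the fraction to 18 digits, so '0.01' becomes 10^16 wei
  return BigInt(whole) * WEI_PER_ETHER + BigInt(fraction.padEnd(18, '0'));
}

function weiToEther(wei) {
  const whole = wei / WEI_PER_ETHER;
  // Left-pad the remainder to 18 digits, then drop trailing zeros
  const fraction = (wei % WEI_PER_ETHER).toString().padStart(18, '0').replace(/0+$/, '');
  return fraction ? `${whole}.${fraction}` : whole.toString();
}
```

For example, typing `0.01` into the form sends exactly 10^16 wei, which `require(msg.value > .01 ether)` rejects; `0.011` or more goes through.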

I see. Now I understand the overall flow.