The best way to normalize price data so that all prices start at 1.0 is to divide every row by the first row:

df1 = df1 / df1.iloc[0]
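Dividing by the first row can be checked on a small example. The sketch below uses made-up prices, and the `normalize_data` name is an assumption for illustration rather than part of the original listing. Dividing a DataFrame by its first row (`df.iloc[0]`) aligns on column labels, so each column is scaled by its own starting price.

```python
import pandas as pd

def normalize_data(df):
    """Scale every column so that its first row equals 1.0."""
    return df / df.iloc[0]

# Made-up prices, for illustration only
dates = pd.date_range('2010-01-01', periods=3)
prices = pd.DataFrame({'SPY': [100.0, 110.0, 90.0],
                       'IBM': [50.0, 55.0, 60.0]}, index=dates)

normalized = normalize_data(prices)
print(normalized)
```

After normalization the curves share a common starting point, which makes relative performance easier to compare on one plot.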

import os

import pandas as pd

import matplotlib.pyplot as plt

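def plot_selected(df, columns, start_index, end_index):
    """Plot the given columns over the given date range."""
    # Body assumed (not present in the listing): label-based slice, then reuse plot_data.
    plot_data(df.loc[start_index:end_index, columns], title="Selected data")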

def symbol_to_path(symbol, base_dir="data"):
    """Return CSV file path given ticker symbol."""
    return os.path.join(base_dir, "{}.csv".format(str(symbol)))

def get_data(symbols, dates):
    """Read adjusted close prices for the given symbols from CSV files."""
    df = pd.DataFrame(index=dates)
    if 'SPY' not in symbols:  # add SPY as a reference if it is absent
        symbols.insert(0, 'SPY')

    for symbol in symbols:
        df_temp = pd.read_csv(symbol_to_path(symbol), index_col='Date',
                              parse_dates=True, usecols=['Date', 'Adj Close'],
                              na_values=['nan'])
        df_temp = df_temp.rename(columns={'Adj Close': symbol})
        df = df.join(df_temp)
        if symbol == 'SPY':  # drop dates that have no SPY price
            df = df.dropna(subset=["SPY"])

    return df

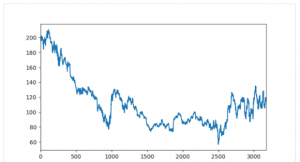

def plot_data(df, title="Stock prices"):
    ax = df.plot(title=title, fontsize=12)
    ax.set_xlabel("Date")
    ax.set_ylabel("Price")
    plt.show()

def test_run():
    dates = pd.date_range('2010-01-01', '2010-12-31')
    symbols = ['GOOG', 'IBM', 'GLD']
    df = get_data(symbols, dates)
    plot_selected(df, ['SPY', 'IBM'], '2010-03-01', '2010-04-01')

if __name__ == "__main__":
    test_run()
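To see why `get_data` joins each symbol onto an empty date-indexed frame and then drops rows where SPY is missing, here is a small sketch that uses in-memory frames instead of CSV files. All values are made up for illustration. `DataFrame.join` defaults to a left join, so every day in `dates` is kept and days without a price show up as NaN until `dropna(subset=['SPY'])` removes them.

```python
import pandas as pd

# Five consecutive calendar days
dates = pd.date_range('2010-01-01', '2010-01-05')
df = pd.DataFrame(index=dates)

# Made-up SPY prices, present on only three of the five days
spy = pd.DataFrame({'SPY': [100.0, 101.0, 102.0]}, index=dates[[0, 3, 4]])
# Made-up IBM prices, missing one of the days on which SPY has a price
ibm = pd.DataFrame({'IBM': [50.0, 51.0]}, index=dates[[0, 4]])

df = df.join(spy)                 # left join: keeps all five dates, NaN where SPY is absent
df = df.dropna(subset=['SPY'])    # keep only the dates that have an SPY price
df = df.join(ibm)                 # IBM is NaN on the one date it lacks
print(df)
```

The resulting frame has one row per date with an SPY price, and any other symbol that lacks a price on one of those dates keeps a NaN there.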