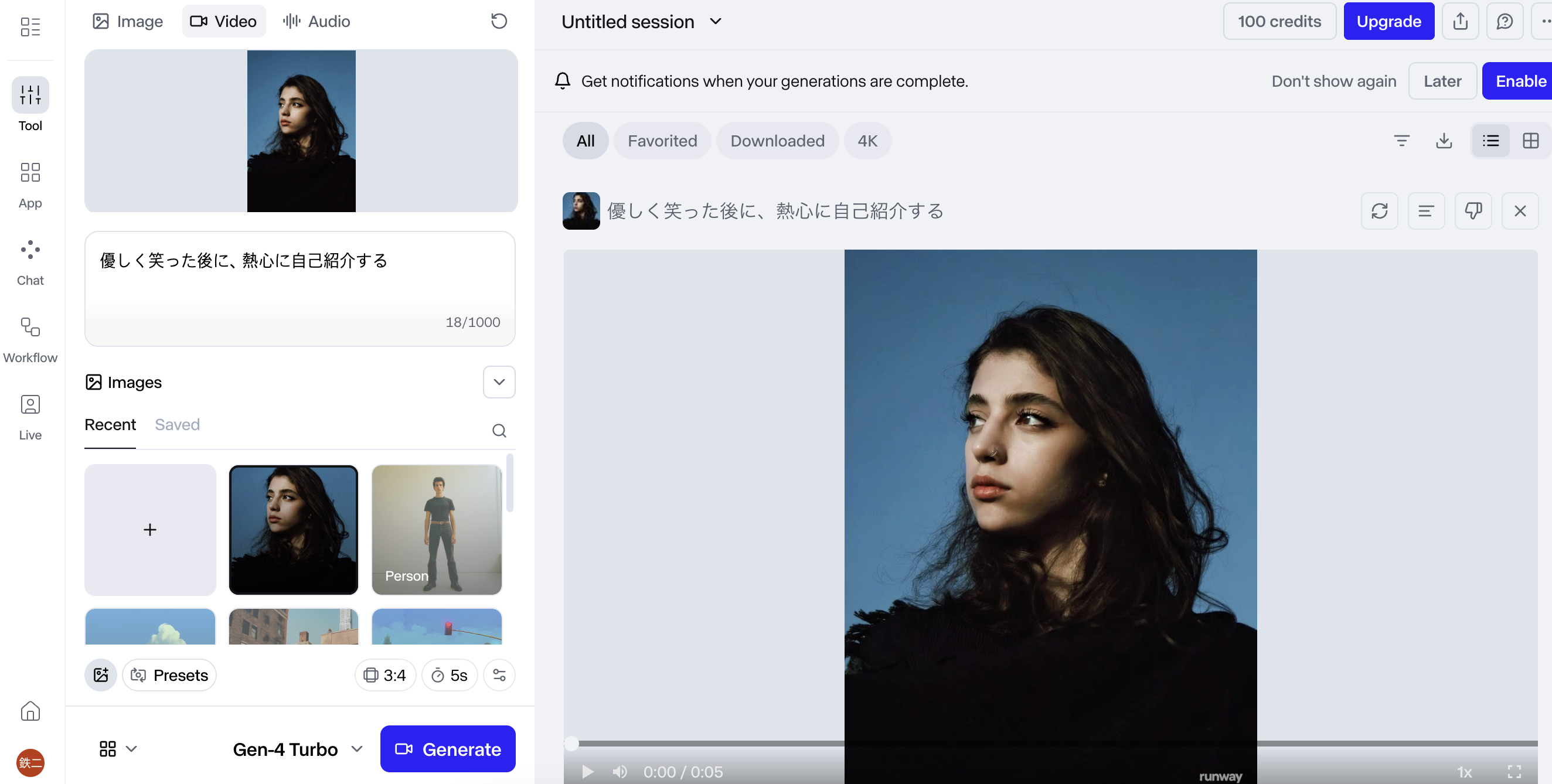

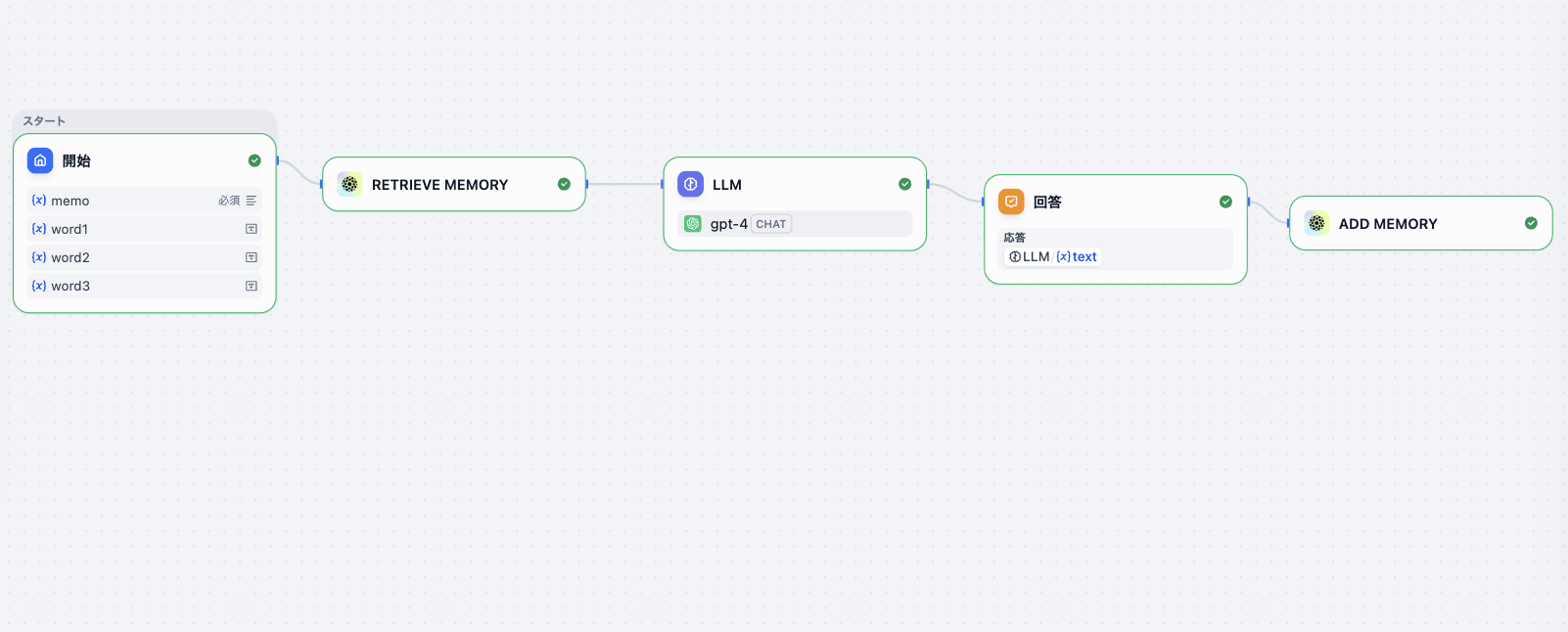

何かアプリケーションを開発する際に、デザインの方向性を決める際に作るビジュアルデザイン/デザインシステムは何を作るのか?

素人(自分)が作ったもの

– デザインの方向性

– トンマナ

– カラーパレット

– タイポグラフィ

– 参考サイト

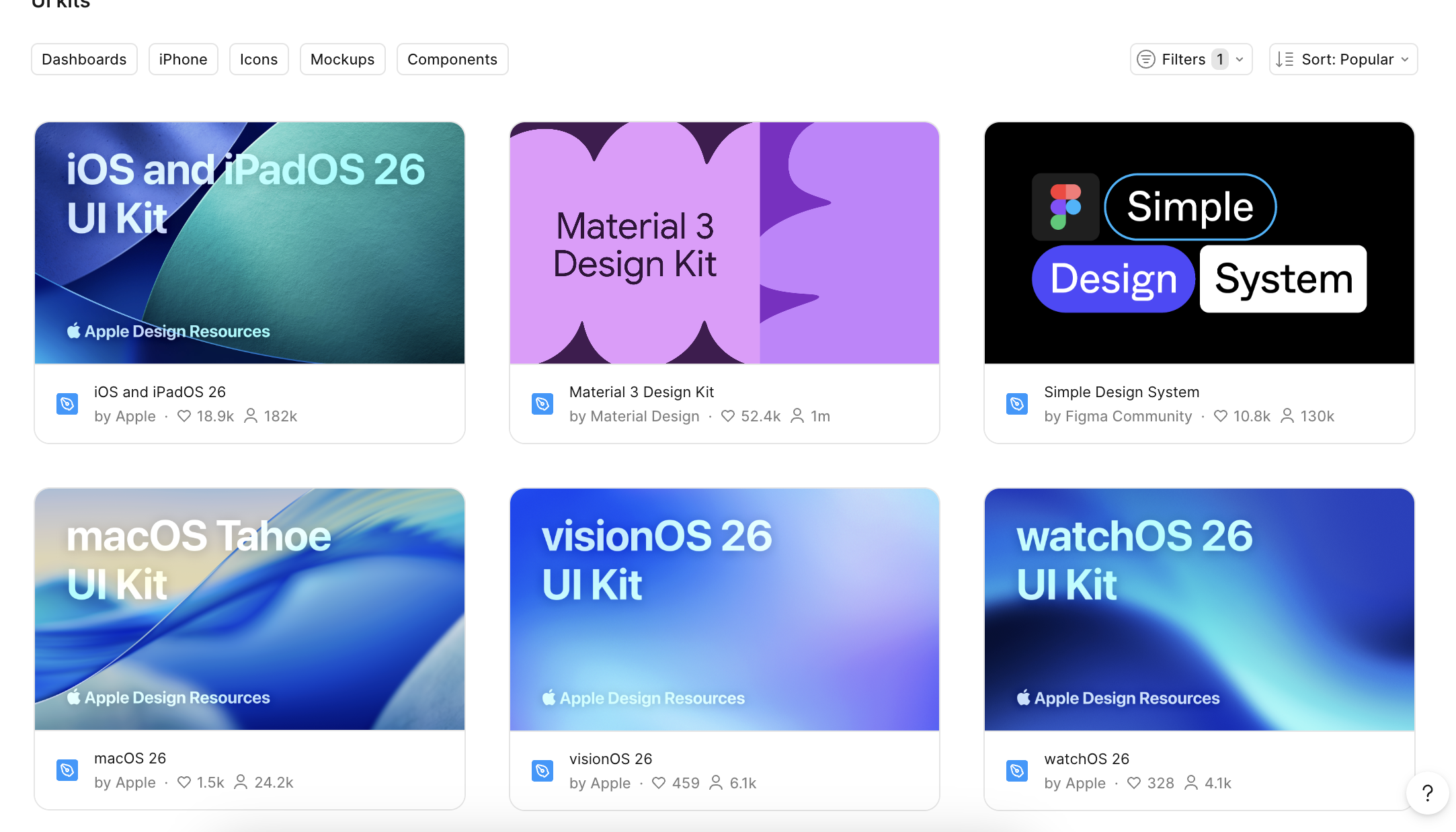

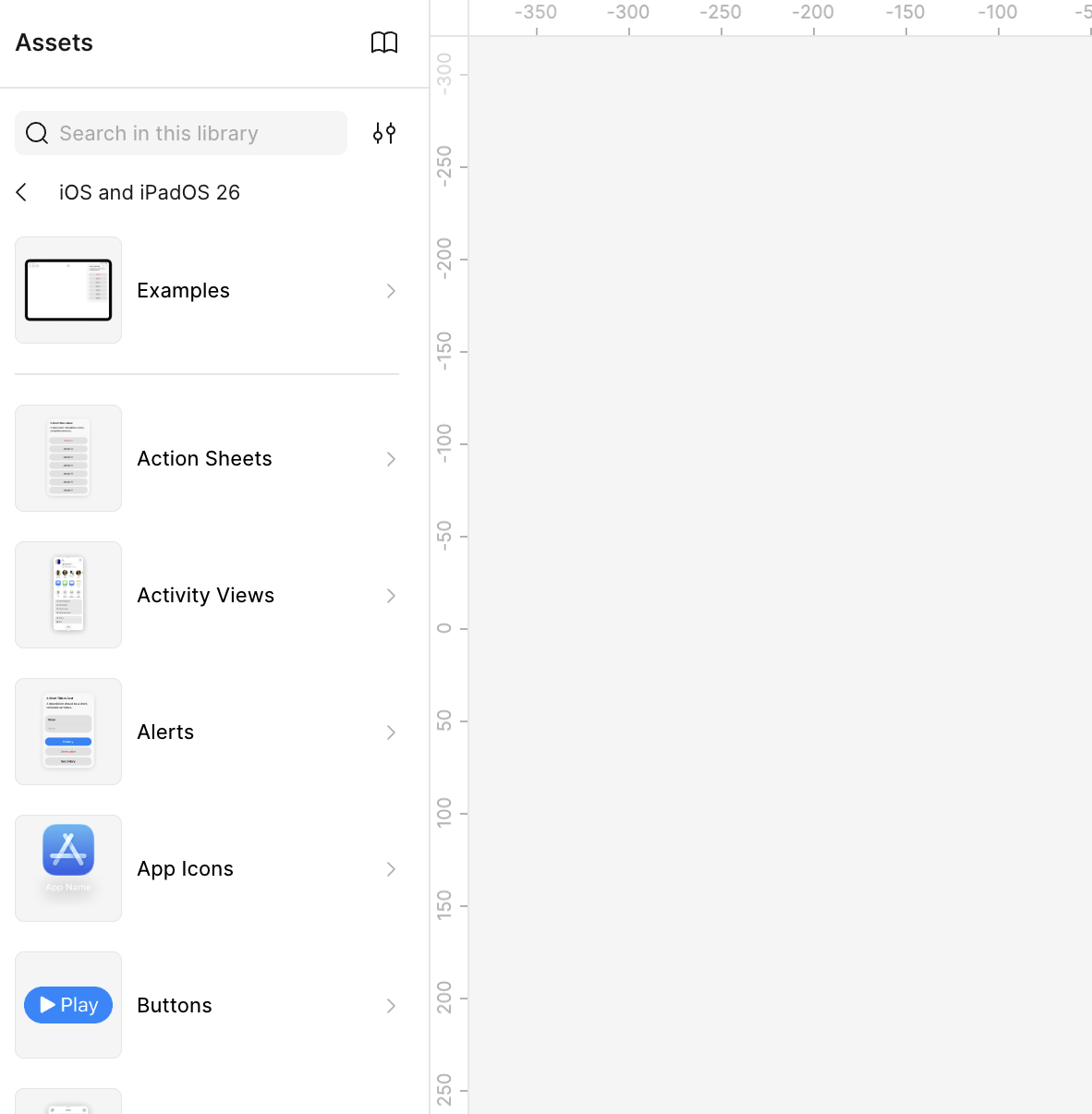

プロが作ると、こういうアウトプットが出てくる。どちらかというともう少し粒度が細かなものを高品質に作ってくるイメージですね。

—-

Typography

– Name, Font, Weight, size, Line height, PLATEGRAM

– H1, H2, H3, T1, T2, T3, C1, C3, C4, C5

Button

– default, hover/pressed, disabled, loding

Inputs & Form

– Base/Multi, Input States(default, focus, error, disabled), Form Controls(password, texture, select)

Color Pallet

Core

Neutral 6種類

Status (error, warning, success)

Brand Layer

Common Primary 6種類

Spacing

Border Radius

Elevation