Classification

-> regression, ranking, reinforcement learning, detection

Logistic Classifier

WX + b = y

-> a, b, c

p = 0.7, 0.2, 0.1

p = probability: close to 1.0 for the correct class, close to 0 for the others

S(y_i) = e^(y_i) / Σ_j e^(y_j)

The softmax function S turns any set of scores into probabilities
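
A minimal sketch of the score-to-probability step above, using only the standard library. The three input scores are made-up values for classes a, b, c and are not from these notes; subtracting the maximum score is a common numerical-stability trick that does not change the result.

```python
import math

def softmax(scores):
    """Turn a list of real-valued scores into probabilities that sum to 1."""
    # Shifting by the maximum leaves the result unchanged but avoids overflow in exp().
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores y = WX + b for classes a, b, c (illustrative values only).
scores = [2.0, 1.0, 0.1]
probs = softmax(scores)
print([round(p, 2) for p in probs])  # prints [0.66, 0.24, 0.1]
```

The largest score always gets the largest probability, and scaling all scores up pushes the output closer to 1.0 for the winner and 0 for the rest.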

<uses-permission android:name="android.permission.CAMERA" />
<uses-feature android:name="android.hardware.camera" android:required="false" />

ObjectAdapter

Model, Presenter, View

Streaming media

Google Cast

-multi device

-android, ios, web

Android TV

-extended android app

-runs on TV

Platform

android -> android TV

Android TV platform

1. Declare the TV activity in the manifest

2. Update the UI with the Leanback library

-BrowseFragment

-DetailsFragment

-SearchFragment

3. Content discovery

-Apps/Games row

-Recommendations

-Search

https://developers.google.com/cast/docs/developers

Getting started

https://cast.google.com/publish/

-classification

-clustering

-dimensionality reduction

-graph analysis

Reinforcement-learning basics

Agent, Environment

a = action, r = reward, s = state
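
A rough sketch of the agent-environment loop: the agent picks an action a from the current state s, and the environment answers with a new state and a reward r. Everything named here (`CoinFlipEnv`, `repeat_last`, `run`) is a hypothetical toy made up for illustration, not something from these notes or from any library.

```python
import random

class CoinFlipEnv:
    """Hypothetical environment: the agent guesses the next coin flip."""

    def __init__(self, seed=0):
        self.rng = random.Random(seed)
        self.state = self.rng.randint(0, 1)   # s: the most recent flip

    def step(self, action):
        flip = self.rng.randint(0, 1)
        reward = 1 if action == flip else 0   # r: 1 for a correct guess
        self.state = flip
        return self.state, reward

def repeat_last(state):
    """Hypothetical agent policy: guess that the last flip repeats."""
    return state

def run(env, policy, steps):
    """Run the interaction loop and return the total reward collected."""
    s = env.state
    total = 0
    for _ in range(steps):
        a = policy(s)          # agent chooses action a from state s
        s, r = env.step(a)     # environment returns new state s and reward r
        total += r
    return total

total = run(CoinFlipEnv(seed=0), repeat_last, 100)
print(total)
```

A learning agent would replace `repeat_last` with a policy that is updated from the observed rewards; this sketch only shows the data flow.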

CPU1 CPU2 CPU3

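#include <pthread.h>
#include <stdlib.h>
#include <string.h>
#include <stdio.h>
#include <assert.h>

/* Thread 1: prints its opinion forever. */
void* f1(void* arg){
    int whos_better;
    (void)arg;                 /* unused */
    whos_better = 1;
    while(1)
        printf("Thread 1: thread %d is better.\n", whos_better);
    return NULL;               /* never reached */
}

/* Thread 2: same loop, different opinion. */
void* f2(void* arg){
    int whos_better;
    (void)arg;                 /* unused */
    whos_better = 2;
    while(1)
        printf("Thread 2: thread %d is better.\n", whos_better);
    return NULL;               /* never reached */
}

int main(int argc, char **argv){
    pthread_t th1, th2;
    (void)argc; (void)argv;    /* unused */

    /* Start both threads; the scheduler decides how their output interleaves. */
    pthread_create(&th1, NULL, f1, NULL);
    pthread_create(&th2, NULL, f2, NULL);

    /* Both threads loop forever, so these joins block until the process is killed. */
    pthread_join(th1, NULL);
    pthread_join(th2, NULL);

    pthread_exit(NULL);
}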

Key Abstractions

1. file

2. file name

3. Directory Tree

#include <stdio.h>      /* fprintf */
#include <stdlib.h>     /* exit, strtol */
#include <sys/types.h>  /* ssize_t */

int main(int argc, char **argv){
    int fd;
    ssize_t len;
    char *filename;
    int key, srch_key, new_value;

    if(argc < 4){
        fprintf(stderr, "usage: sabotage filename key value\n");
        exit(EXIT_FAILURE);
    }

    filename = argv[1];
    srch_key = strtol(argv[2], NULL, 10);
    new_value = strtol(argv[3], NULL, 10);
}

CPU

Memory: fast, random access, temporary

Disk: slow, sequential access, durable

CPU Register <- cache -> Main Memory

10 cycle latency, 256 bytes / cycle

Memory Hierarchy

CPU

Main Memory

Write Policy

Hit:

– write-through

– write-back

Miss:

– write-allocate

– no-write-allocate
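
To make the four policies concrete, here is a toy model, assuming one value per cache line and no capacity limit. `ToyCache` and its fields are hypothetical names invented for this sketch; real caches fetch a whole block on write-allocate and evict lines when they run out of space.

```python
class ToyCache:
    """Toy cache in front of a dict that stands in for main memory."""

    def __init__(self, memory, write_back=True, write_allocate=True):
        self.memory = memory            # backing store: address -> value
        self.lines = {}                 # cached copies: address -> value
        self.dirty = set()              # addresses changed in the cache only
        self.write_back = write_back
        self.write_allocate = write_allocate

    def write(self, addr, value):
        hit = addr in self.lines
        if not hit and not self.write_allocate:
            # Miss with no-write-allocate: bypass the cache, write memory directly.
            self.memory[addr] = value
            return
        # Hit, or miss with write-allocate: the line now lives in the cache.
        self.lines[addr] = value
        if self.write_back:
            self.dirty.add(addr)        # write-back: defer the memory update
        else:
            self.memory[addr] = value   # write-through: update memory now

    def evict(self, addr):
        # A write-back cache must flush dirty data before dropping the line.
        if addr in self.dirty:
            self.memory[addr] = self.lines[addr]
            self.dirty.discard(addr)
        self.lines.pop(addr, None)

mem = {0: 0}
cache = ToyCache(mem, write_back=True, write_allocate=True)
cache.write(0, 42)
print(mem[0])   # prints 0: memory is stale until the line is evicted
cache.evict(0)
print(mem[0])   # prints 42
```

Write-back is usually paired with write-allocate, and write-through with no-write-allocate, although the toy class lets the two settings be combined freely.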

Virtual Address Abstraction

Kernel addresses, user stack, heap, uninitialized data, initialized data, code

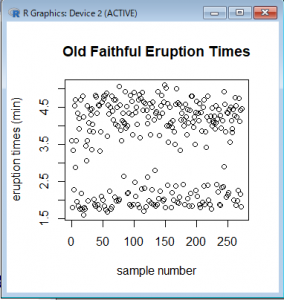

> library(ggplot2)
> names(faithful)
[1] "eruptions" "waiting"
> plot(faithful$eruptions, xlab = "sample number", ylab = "eruption times (min)", main = "Old Faithful Eruption Times")

qplot(x = waiting, data = faithful, binwidth = 3, main = "Waiting time to next eruption (min)")

ggplot(faithful, aes(x = waiting)) + geom_histogram(binwidth = 1)

> names(mtcars)

 [1] "mpg"  "cyl"  "disp" "hp"   "drat" "wt"   "qsec" "vs"   "am"   "gear"
[11] "carb"

a = 3.2

a = "a string"

print("The variable 'a' stores:"); print(a)

a = 10; b = 5; c = 1

if (a < b){

d = 1

}else if (a == b){

d = 2

}else{

d = 3

}

d

stopifnot(d==3)

sum = 0

i = 1

while(i <= 10){

sum = sum + i

i = i + 1

}

stopifnot(sum==55)

mySum = function(a,b){

return(a + b)

}

x = vector(length=3, mode="numeric")

y = c(4,3,3)

stopifnot( x == c(0,0,0))

stopifnot(length(y) == 3)

x[1] = 2

x[3] = 1

stopifnot( x == c(2,0,1) )

a = 2*x + y

stopifnot( a == c(8,3,4) )

a = a - 1

stopifnot( a == c(7,2,3) )

stopifnot( (a>=7) == c(TRUE,FALSE,FALSE))

stopifnot( (a==2) == c(FALSE,TRUE,FALSE))

mask = c(TRUE,FALSE,TRUE)

stopifnot( a[mask] == c(7,3) )

indices = c(1,3)

stopifnot( a[indices] == c(7,3))

stopifnot( a[c(-1,-3)] == c(2) )

stopifnot( any(c(FALSE,TRUE,FALSE)) )

stopifnot( all(c(TRUE,TRUE,TRUE)) )

stopifnot( which(c(TRUE,FALSE,TRUE)) == c(1,3) )

b = rep(3.2, times=5)

stopifnot( b == c(3.2, 3.2, 3.2, 3.2, 3.2))

w = seq(0,3)

stopifnot(w == c(0,1,2,3))

x = seq(0,1,by=0.2)

# compare with a tolerance: 3 * 0.2 is not exactly 0.6 in floating point

stopifnot(isTRUE(all.equal(x, c(0.0, 0.2, 0.4, 0.6, 0.8, 1.0))))

y = seq(0,1,length.out=3)

stopifnot( y == c(0.0, 0.5, 1.0) )

z = 1:10

stopifnot(z == seq(1,10,by=1))

sum = 0

for(i in z){

sum = sum + i

}

stopifnot(sum == 55)

x = 1:10

f = function(a){

a[1] = 10

}

f(x)

stopifnot(x == 1:10)

y
     [,1] [,2]
[1,]    1    5
[2,]    2    6

2 * y + 1
     [,1] [,2]
[1,]    3   11
[2,]    5   13

y %*% y
     [,1] [,2]
[1,]   11   35
[2,]   14   46

x
     [,1] [,2] [,3] [,4] [,5]
[1,]    1    5    9   13   17
[2,]    2    6   10   14   18
[3,]    3    7   11   15   19
[4,]    4    8   12   16   20

outer(x[,1], x[,1])

rbind(x[1,], x[1,])

rbind stacks its arguments vertically (as rows)

     [,1] [,2] [,3] [,4] [,5]
[1,]    1    5    9   13   17
[2,]    1    5    9   13   17

cbind(x[1,], x[1,])

L = list(name = 'John', age = 55, no.children = 2, children.ages = c(15, 18))

names(L)   # "name" "age" "no.children" "children.ages"

L[[2]]     # 55

L$name     # "John"

L['name']  # a one-element list holding name = "John"

L$children.ages[2]

L[[4]][2]

names(R) = c("NAME", "AGE", "SALARY")

if-Else

a = 10; b = 5; c = 1

if (a < b){

d = 1

} else if (a == b){

d = 2

} else {

d = 3

}

print(d)

R for loop

total = function(n){

sum = 0

for(i in 1:n){

sum = sum + i

}

print(sum)

return(sum)

}

total(100)

total = function(n){

sum = 5050

num = n

repeat {

sum = sum - num

num = num - 1

if(sum == 0)break

}

return(sum)

}

total = function(){

sum = 0

a = 1

b = 10

while (a