I set out in high spirits to install VirtualBox, but...

Whaaaaaaat?!

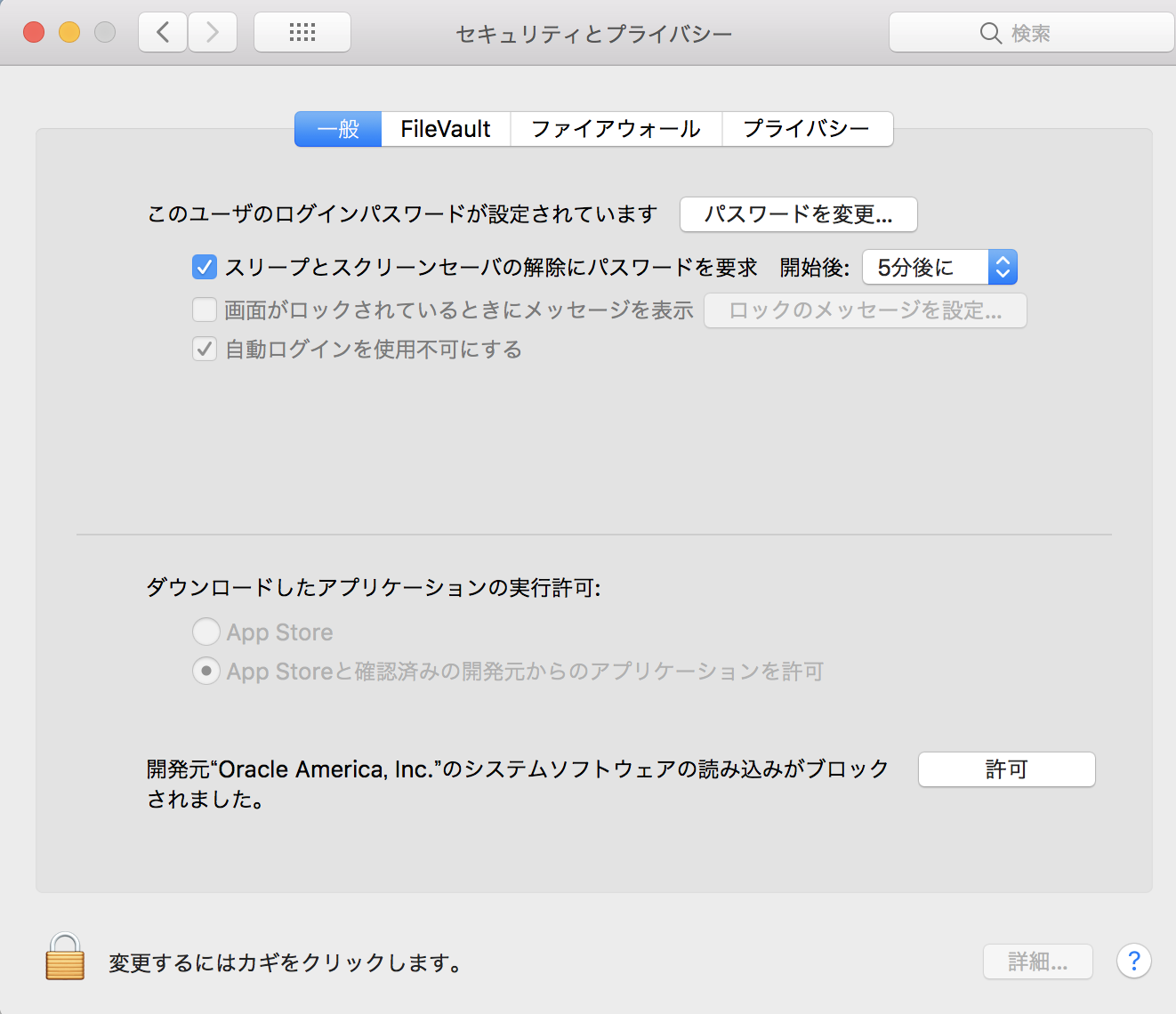

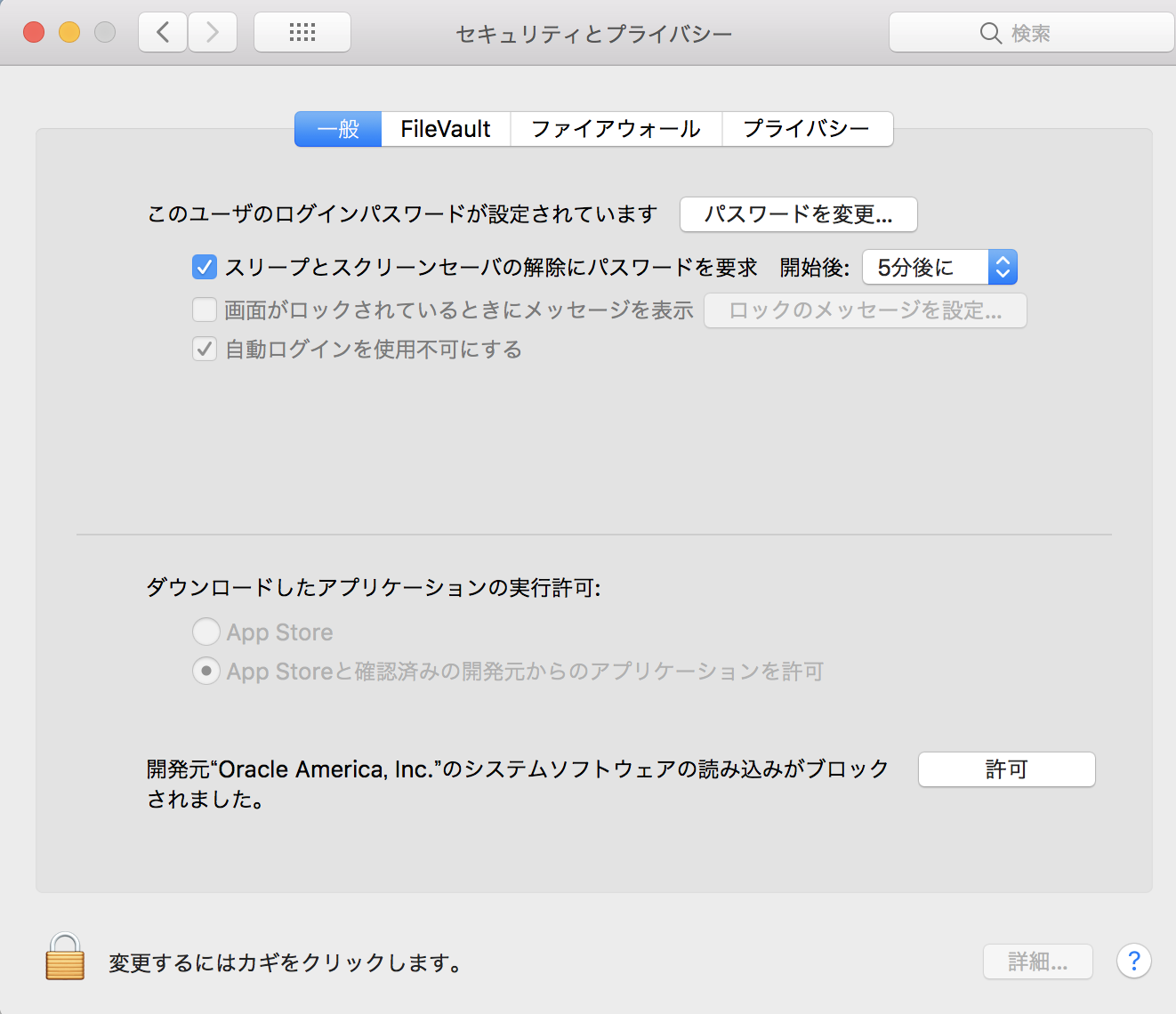

Under Security & Privacy, allow the software from Oracle.

Then run the installer again.

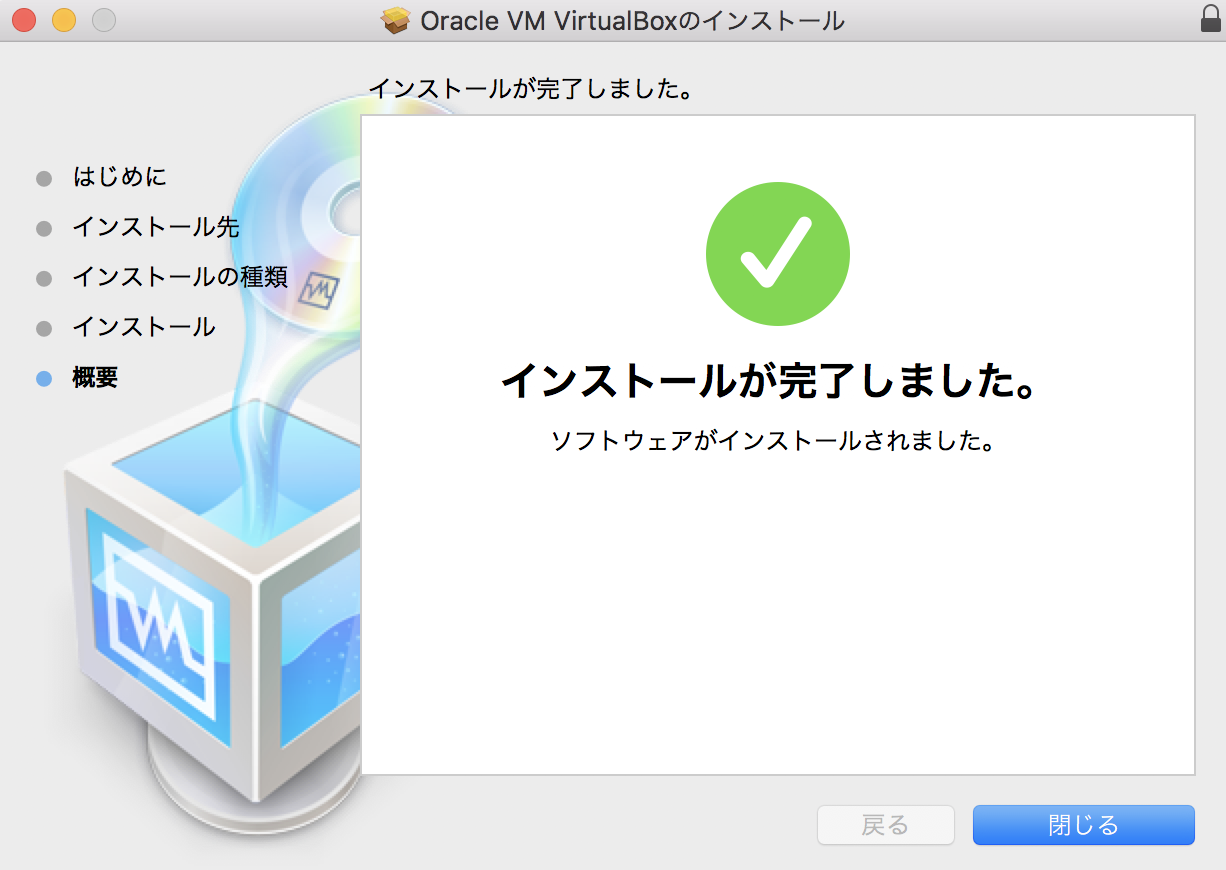

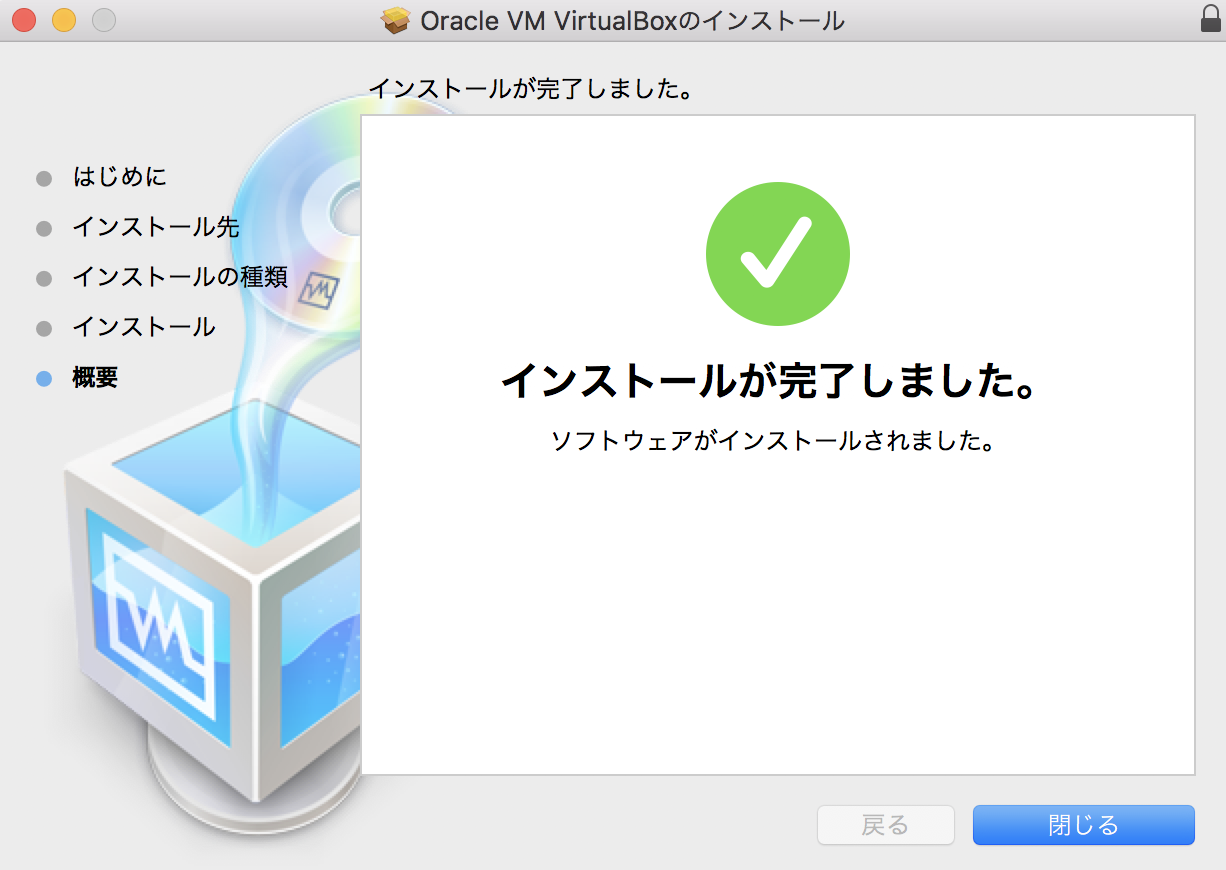

That worked.

To think about team building for development, I bought and read Team Geek (by Google's Brian W. Fitzpatrick and Ben Collins-Sussman).

https://amzn.to/2JUsl7R

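I was surprised that Google places so much value on humility, respect, and trust.

I expected more of a technical philosophy, but it turns out to be mostly about how you conduct yourself within a team...

I guess it's the same at every development shop.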

Determine the coding style of the specified file based on a defined set of rules.
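As a rough illustration only, a style check can be modeled as a set of named rules applied line by line. The three rules and the names `RULES`, `check_style`, and `check_file` below are hypothetical examples chosen for this sketch; real style checkers parse the language and ship far richer rule sets.

```ruby
# Hypothetical example rules: each maps a rule name to a predicate that
# returns true when a single line violates it.
RULES = {
  "line-too-long" => ->(line) { line.chomp.length > 80 },
  "trailing-whitespace" => ->(line) { line.chomp.match?(/[ \t]+\z/) },
  "hard-tab" => ->(line) { line.include?("\t") }
}.freeze

# Applies every rule to every line of +source+ and returns an array of
# [line_number, rule_name] pairs, in line order.
def check_style(source, rules = RULES)
  violations = []
  source.each_line.with_index(1) do |line, lineno|
    rules.each do |name, violated|
      violations << [lineno, name] if violated.call(line)
    end
  end
  violations
end

# Reads a file from disk and checks it with the same rules.
def check_file(path, rules = RULES)
  check_style(File.read(path), rules)
end
```

For example, `check_style("def ok\n\tputs 'tab' \nend\n")` flags line 2 for both trailing whitespace and a hard tab.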

This is an interface to the mcrypt library, which supports a wide variety of block algorithms such as DES, TripleDES, Blowfish (default), 3-WAY, SAFER-SK64, SAFER-SK128, TWOFISH, TEA, RC2 and GOST in the CBC, OFB, CFB and ECB cipher modes. In addition, it supports RC6 and IDEA, which are considered "non-free".
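For a feel of what a block cipher mode does in practice, here is a minimal Ruby sketch of a CBC round trip. It swaps in Ruby's standard `openssl` library and AES-128 instead of mcrypt and the algorithms listed above (mcrypt itself is not used, and on current OpenSSL builds ciphers such as Blowfish and single DES may only be available through the legacy provider). The helper names `cbc_encrypt` and `cbc_decrypt` are hypothetical.

```ruby
require "openssl"

# Encrypts +plaintext+ with a block cipher in CBC mode.
# Returns [ciphertext, key, iv]; AES-128-CBC stands in for the mcrypt ciphers.
def cbc_encrypt(plaintext, algorithm = "aes-128-cbc")
  cipher = OpenSSL::Cipher.new(algorithm)
  cipher.encrypt
  key = cipher.random_key  # random key of the right length, also set on the cipher
  iv = cipher.random_iv    # CBC needs a fresh IV for every message
  ciphertext = cipher.update(plaintext) + cipher.final  # final adds PKCS#7 padding
  [ciphertext, key, iv]
end

# Reverses cbc_encrypt given the same key and IV.
def cbc_decrypt(ciphertext, key, iv, algorithm = "aes-128-cbc")
  decipher = OpenSSL::Cipher.new(algorithm)
  decipher.decrypt
  decipher.key = key
  decipher.iv = iv
  decipher.update(ciphertext) + decipher.final  # final strips the padding
end
```

Each call to `cbc_encrypt` generates a new random key and IV; the IV is not secret, but it must be passed to `cbc_decrypt` together with the key.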

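When Vagrant starts, the vagrant-omnibus plugin checks whether Chef Client / Chef Solo is installed on the instance at the expected version and, if not, automatically installs it on the instance using Chef's omnibus installer. The plugin provides the `config.omnibus.chef_version` setting used in the Vagrantfile below.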

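```ruby
# -*- mode: ruby -*-
# vi: set ft=ruby :

Vagrant.configure("2") do |config|
  config.vm.box = "<your_box_name_here>"
  config.vm.network :private_network, ip: "192.168.33.33"

  # Requires the vagrant-omnibus plugin: installs this Chef version on the guest if missing.
  config.omnibus.chef_version = "11.4.0"

  # Provision with Chef Solo using the cookbooks in ./cookbooks.
  config.vm.provision :chef_solo do |chef|
    chef.cookbooks_path = "./cookbooks"
    chef.add_recipe "apache"
  end
end
```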
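The version check described above can be sketched as a small decision function. This is a conceptual illustration under stated assumptions, not the vagrant-omnibus plugin's actual code: `chef_install_needed?` is a hypothetical name, `installed` stands for the version string reported by Chef on the guest (or `nil` when Chef is missing), and `desired` mirrors `config.omnibus.chef_version`.

```ruby
require "rubygems"

# Hypothetical decision logic: install Chef when it is missing, or when the
# installed version differs from the one requested in the Vagrantfile.
def chef_install_needed?(installed, desired)
  return true if installed.nil? || installed.strip.empty?

  Gem::Version.new(installed.strip) != Gem::Version.new(desired)
end
```

With `desired = "11.4.0"`, a guest reporting `11.2.0` or no Chef at all would trigger an install, while one already on `11.4.0` would be left alone.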

Berkshelf is a tool to manage Chef cookbooks and their dependencies.

If you declare the cookbooks you use in a definition file (the Berksfile), Berkshelf resolves their dependencies and fetches them from the repository automatically.
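A minimal Berksfile sketch for the Vagrantfile above might look like the following. The Supermarket source URL is the usual public one; the cookbook name `apache2` is an assumption (check the name of the cookbook you actually depend on), and the recipe passed to `chef.add_recipe` would need to match it.

```ruby
# Berksfile (sketch): where to fetch cookbooks from, and which ones we need.
source "https://supermarket.chef.io"

# Assumed cookbook name; Berkshelf pulls in its dependencies automatically.
cookbook "apache2"
```

`berks install` resolves and downloads the dependencies, and `berks vendor cookbooks` copies them into `./cookbooks`, the directory that `chef.cookbooks_path` points at.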

```
[vagrant@localhost test]$ gem install mixlib-archive -v 0.4.20
[vagrant@localhost test]$ gem install mixlib-config -v 2.2.18
[vagrant@localhost test]$ gem install berkshelf
Fetching: fuzzyurl-0.9.0.gem (100%)
Successfully installed fuzzyurl-0.9.0
Fetching: chef-config-15.1.36.gem (100%)
Successfully installed chef-config-15.1.36
Fetching: builder-3.2.3.gem (100%)
Successfully installed builder-3.2.3
Fetching: erubis-2.7.0.gem (100%)
Successfully installed erubis-2.7.0
Fetching: gssapi-1.3.0.gem (100%)
Successfully installed gssapi-1.3.0
Fetching: gyoku-1.3.1.gem (100%)
Successfully installed gyoku-1.3.1
Fetching: httpclient-2.8.3.gem (100%)
Successfully installed httpclient-2.8.3
Fetching: little-plugger-1.1.4.gem (100%)
Successfully installed little-plugger-1.1.4
Fetching: logging-2.2.2.gem (100%)
Successfully installed logging-2.2.2
Fetching: nori-2.6.0.gem (100%)
Successfully installed nori-2.6.0
Fetching: rubyntlm-0.6.2.gem (100%)
Successfully installed rubyntlm-0.6.2
Fetching: winrm-2.3.2.gem (100%)
Successfully installed winrm-2.3.2
Fetching: rubyzip-1.2.3.gem (100%)
Successfully installed rubyzip-1.2.3
Fetching: winrm-fs-1.3.2.gem (100%)
Successfully installed winrm-fs-1.3.2
Fetching: train-core-2.1.13.gem (100%)
ERROR: Error installing berkshelf:
There are no versions of train-core (>= 2.0.12, ~> 2.0) compatible with your Ruby & RubyGems. Maybe try installing an older version of the gem you're looking for?
train-core requires Ruby version >= 2.4. The current ruby version is 2.3.0.
```

Whaaaaaaaaaaaaaaaaaaaat?!
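The failure comes from a version constraint: train-core declares that it needs Ruby `>= 2.4`, and the VM runs Ruby 2.3.0. The sketch below reproduces that comparison with RubyGems' own `Gem::Requirement` and `Gem::Version` classes; `ruby_satisfies?` is a hypothetical helper name. The likely ways out are upgrading the VM's Ruby to 2.4 or later, or, as the error message suggests, installing older versions of the gems (which versions still support 2.3 is not verified here).

```ruby
require "rubygems"

# Checks whether a Ruby version satisfies a gem's required Ruby version
# constraint, the same kind of comparison RubyGems made when it rejected train-core.
def ruby_satisfies?(requirement, ruby_version = RUBY_VERSION)
  Gem::Requirement.new(requirement).satisfied_by?(Gem::Version.new(ruby_version))
end

# The constraint and Ruby version from the error message above.
p ruby_satisfies?(">= 2.4", "2.3.0")  # → false
```

Calling `ruby_satisfies?(">= 2.4")` without a second argument checks the Ruby that is running the script.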

```
$ zip -r filename directoryname
```

```
[vagrant@localhost test]$ ls
test
[vagrant@localhost test]$ zip -r sample test
-bash: zip: command not found
```

Huh, zip isn't installed?!

```
[vagrant@localhost test]$ sudo yum install zip
Installed:
zip.x86_64 0:3.0-1.el6_7.1
Complete!
```

Now create the zip file.

```
[vagrant@localhost test]$ zip -r sample.zip test
adding: test/ (stored 0%)
[vagrant@localhost test]$ ls
sample.zip test
```

Oh!?

Options, as in `$ zip -e -v`:

- `-e` (`--encrypt`): encrypt the archive (zip prompts for a password)
- `-v` (`--verbose`): print more detailed messages while running

Aaaaaaaaaaaaaaaaaah,

there's still more, more, more, mooooooooooooore to learn!

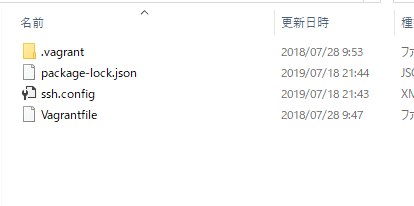

In the directory that contains the Vagrantfile, set up the OpenSSH configuration.

```
>vagrant ssh-config
Host default
  HostName 127.0.0.1
  User vagrant
  Port 2222
  UserKnownHostsFile /dev/null
  StrictHostKeyChecking no
  PasswordAuthentication no
  IdentityFile C:/Users/hoge/MyVagrant/Cent/.vagrant/machines/default/virtualbox/private_key
  IdentitiesOnly yes
  LogLevel FATAL
```

Hm? What is all this?

Write that output to a file named ssh.config.

```
>vagrant ssh-config > ssh.config
>scp -F ssh.config vagrant@default:package-lock.json ./
package-lock.json 100% 11KB 11.5KB/s 00:00
```

Seriously?

So basically, I managed to connect to the Vagrant box over scp?

Whoooooooooooa, I feel like I'll never catch up.
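What makes `vagrant@default` work is that `default` is just a `Host` alias in `ssh.config`: `scp -F ssh.config` reads that file and connects to the `HostName`, `Port`, and `IdentityFile` listed under it, i.e. 127.0.0.1 on port 2222 with Vagrant's private key. The simplified Ruby sketch below parses that format into a hash to make the mapping visible; `parse_ssh_config` is a hypothetical helper that ignores `Key=Value` syntax, `Match` blocks, multi-pattern `Host` lines, and quoting.

```ruby
# Parses OpenSSH client config text (such as `vagrant ssh-config` output)
# into { host_alias => { option => value } }. Simplified sketch only.
def parse_ssh_config(text)
  hosts = {}
  current = nil
  text.each_line do |raw|
    line = raw.strip
    next if line.empty? || line.start_with?("#")  # skip blanks and comments

    key, value = line.split(/\s+/, 2)  # option name, then the rest of the line
    if key.casecmp?("Host")
      current = hosts[value] = {}      # start a new host section
    elsif current
      current[key] = value             # option belongs to the current host
    end
  end
  hosts
end
```

Feeding it the output above, `parse_ssh_config(text)["default"]["Port"]` would give `"2222"`.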

OpenSSH (OpenBSD Secure Shell) is software for using the SSH protocol, including both an SSH server and an SSH client. It is developed by the OpenBSD project and released under a BSD license. There are several other SSH implementations, including SSH Tectia, the original SSH implementation, but as of 2008 OpenSSH was the most widely used SSH implementation in the world.

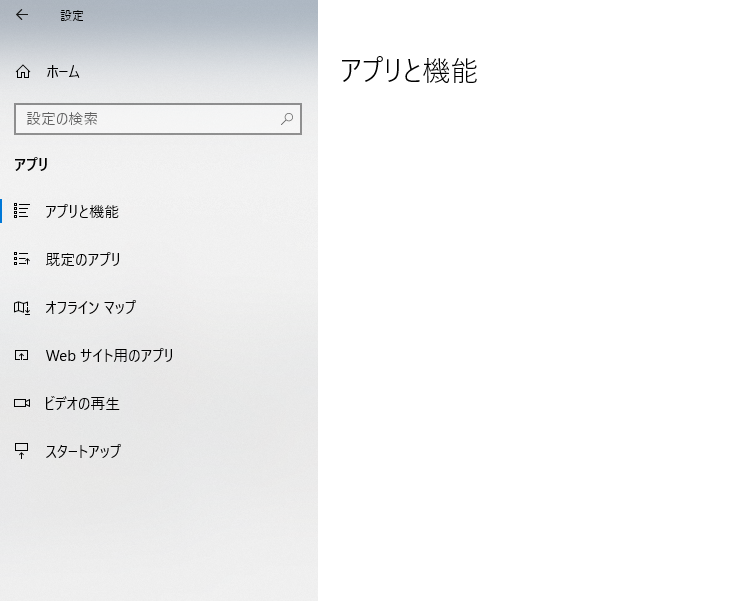

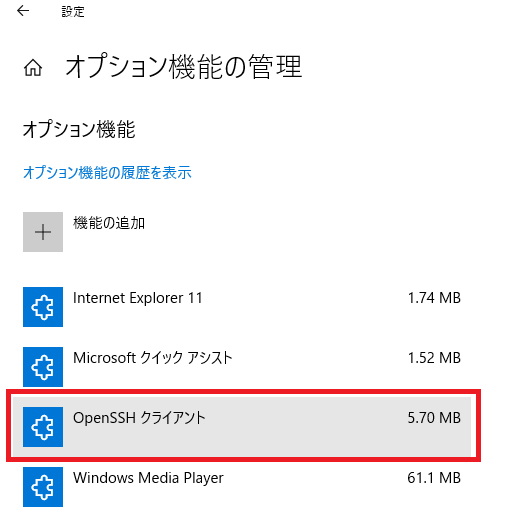

Apparently OpenSSH is now officially built into Windows 10 and ready to use.

Open Apps & features.

Manage optional features → OpenSSH Client

Oh, it's already installed.

Next, open Windows PowerShell.

```
> ssh localhost
ssh: connect to host localhost port 22: Connection refused
> New-NetFirewallRule -Protocol TCP -LocalPort 22 -Direction Inbound -Action Allow - DisplayName SSH
New-NetFirewallRule : A positional parameter cannot be found that accepts argument '-'.
At line:1 char:1
+ New-NetFirewallRule -Protocol TCP -LocalPort 22 -Direction Inbound -A …
+ ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~
+ CategoryInfo : InvalidArgument: (:) [New-NetFirewallRule], ParameterBindingException
+ FullyQualifiedErrorId : PositionalParameterNotFound,New-NetFirewallRule
> New-NetFirewallRule -Protocol TCP -LocalPort 22 -Direction Inbound -Action Allow -DisplayName SSH
New-NetFirewallRule : Access is denied.
At line:1 char:1
+ New-NetFirewallRule -Protocol TCP -LocalPort 22 -Direction Inbound -A …
+ ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~
+ CategoryInfo : PermissionDenied: (MSFT_NetFirewallRule:root/standardcimv2/MSFT_NetFirewallRule) [New-NetFirewallRule], CimException
+ FullyQualifiedErrorId : Windows System Error 5,New-NetFirewallRule
```

Access denied.

Whyyyy?!
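`Connection refused` means the connection attempt reached the machine but nothing was listening on port 22. The log only shows the OpenSSH *client* being checked, and without the separate OpenSSH Server (sshd) running there is nothing to answer, so a firewall rule alone would not change that. (Separately, `Windows System Error 5` is Windows' access-denied code, and creating firewall rules generally requires running PowerShell as Administrator.) The Ruby sketch below, built around a hypothetical `port_open?` helper, shows the check behind that first error: probe a TCP port and treat a refused connection as "nothing is listening".

```ruby
require "socket"

# Returns true if something accepts TCP connections on host:port.
# Errno::ECONNREFUSED ("Connection refused") means the host answered but no
# process is listening on that port, e.g. no SSH server is running.
def port_open?(host, port, timeout: 1)
  Socket.tcp(host, port, connect_timeout: timeout) { true }  # socket closed after block
rescue Errno::ECONNREFUSED
  false
rescue SystemCallError, SocketError, IOError
  # Timeouts, unreachable hosts, DNS failures: treat as "not reachable".
  false
end
```

On the machine above, `port_open?("localhost", 22)` should keep returning `false` until an SSH server is actually running there.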

The `scp` command, short for "Secure Copy", copies files between a remote host and the local host over SSH, so the communication between them is encrypted while the file is transferred.
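Because scp runs over SSH, the earlier `scp -F ssh.config vagrant@default:package-lock.json ./` call can be wrapped from Ruby by passing an argument array to `Kernel#system`, which runs the command without a shell and so avoids quoting issues. A minimal sketch; `scp_download_argv` and `scp_download` are hypothetical names, and actually running it requires `scp` on the PATH and the VM to be up.

```ruby
# Builds the argv for an scp download that reuses a saved ssh config file,
# e.g. scp -F ssh.config vagrant@default:package-lock.json ./
def scp_download_argv(config_path, user, host_alias, remote_path, local_dir = "./")
  ["scp", "-F", config_path, "#{user}@#{host_alias}:#{remote_path}", local_dir]
end

# Runs scp without a shell. system returns true on exit status 0,
# false on a non-zero status, and nil if scp could not be executed.
def scp_download(config_path, user, host_alias, remote_path, local_dir = "./")
  system(*scp_download_argv(config_path, user, host_alias, remote_path, local_dir))
end
```

Usage: `scp_download("ssh.config", "vagrant", "default", "package-lock.json")` performs the same copy as the command in the log.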