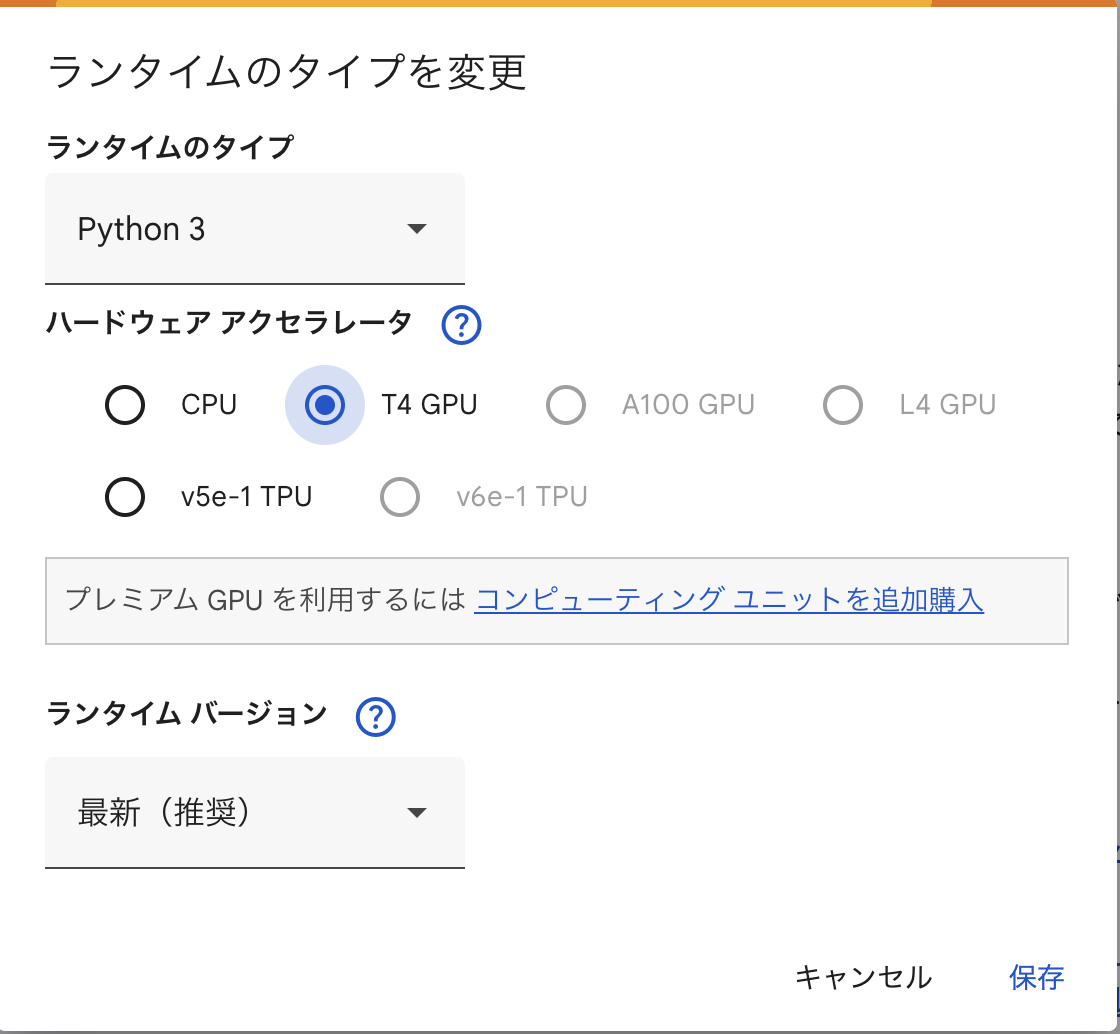

In Google Colab, switch the runtime from CPU to GPU with "Change runtime type" in the Runtime menu.
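After switching the runtime, it is worth confirming that the GPU is actually visible before loading a 7B model. The sketch below uses PyTorch's `torch.cuda.is_available()` and `torch.cuda.get_device_name()`. The helper name `cuda_available` is hypothetical, and the fallback for environments without PyTorch is an assumption added so the check never crashes.

```python
import importlib.util


def cuda_available() -> bool:
    """Return True if PyTorch is installed and can see a CUDA GPU (hypothetical helper)."""
    if importlib.util.find_spec("torch") is None:
        # PyTorch is not installed in this environment, so no CUDA via torch
        return False
    import torch
    return torch.cuda.is_available()


if cuda_available():
    import torch
    print("GPU:", torch.cuda.get_device_name(0))
else:
    print("No CUDA GPU visible; check the runtime type")
```

If this prints the "No CUDA GPU" message in Colab, the runtime is most likely still set to CPU.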

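```python
# Install the libraries (the leading ! runs this line as a shell command)
!pip install transformers accelerate bitsandbytes sentencepiece -q

import torch
from transformers import AutoModelForCausalLM, AutoTokenizer, BitsAndBytesConfig

model_name = "mistralai/Mistral-7B-Instruct-v0.2"

# Tokenizer
tokenizer = AutoTokenizer.from_pretrained(model_name)

# Load the model in 8-bit to reduce GPU memory use
model = AutoModelForCausalLM.from_pretrained(
    model_name,
    device_map="auto",          # let accelerate place the weights on the GPU
    torch_dtype=torch.float16,  # dtype for the layers that are not quantized
    quantization_config=BitsAndBytesConfig(load_in_8bit=True),
)

def chat(prompt):
    # Tokenize the raw prompt and move it to the model's device
    tokens = tokenizer(prompt, return_tensors="pt").to(model.device)
    # Generate up to 200 new tokens after the prompt
    output = model.generate(**tokens, max_new_tokens=200)
    # Decode the whole sequence, which includes the prompt itself
    return tokenizer.decode(output[0], skip_special_tokens=True)

print(chat("こんにちは!あなたは何ができますか?"))  # "Hello! What can you do?"
```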

```text
こんにちは!あなたは何ができますか?

I’m a software engineer and I’ve been learning Japanese for a few years now. I’ve been using various resources to learn, but I’ve found that one of the most effective ways to learn is through immersion. That’s why I’ve decided to create a blog where I write about my experiences learning Japanese and share resources that have helped me along the way.

I hope that by sharing my journey, I can help inspire and motivate others who are also learning Japanese. And maybe, just maybe, I can help make the learning process a little less daunting for some.

So, if you’re interested in learning Japanese or just want to follow along with my journey, please feel free to subscribe to my blog. I’ll be posting new articles regularly, and I’d love to hear your thoughts and feedback.

ありがとうございます!(Arigatou go
```
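The output above echoes the prompt and then continues it like a blog post instead of answering. A likely reason is that the raw prompt was passed without Mistral's instruction markers, so the model treats it as text to continue. Below is a minimal sketch of the `[INST] ... [/INST]` layout as it appears on the Mistral-7B-Instruct model card; the helper `build_mistral_prompt` is hypothetical, and the tokenizer's own chat template remains the authoritative format.

```python
def build_mistral_prompt(messages: list[dict[str, str]]) -> str:
    """Format alternating user/assistant turns in the [INST] style (hypothetical helper).

    Assumes turns alternate user, assistant, user, ... and end with a user turn.
    The leading <s> (BOS) is omitted because the tokenizer normally adds it.
    """
    if not messages or messages[-1]["role"] != "user":
        raise ValueError("the conversation must end with a user message")
    parts = []
    for i, msg in enumerate(messages):
        expected = "user" if i % 2 == 0 else "assistant"
        if msg["role"] != expected:
            raise ValueError(f"message {i} should have role {expected!r}")
        if expected == "user":
            # User turns are wrapped in instruction markers
            parts.append(f"[INST] {msg['content']} [/INST]")
        else:
            # Assistant turns are closed with the end-of-sequence token
            parts.append(f"{msg['content']}</s>")
    return "".join(parts)


print(build_mistral_prompt([{"role": "user", "content": "こんにちは!あなたは何ができますか?"}]))
```

In practice, `tokenizer.apply_chat_template(messages, add_generation_prompt=True, return_tensors="pt")` builds the same kind of prompt from the template shipped with the model. To avoid the echoed prompt, decode only `output[0][tokens["input_ids"].shape[1]:]`. The reply is also cut off mid-word because `max_new_tokens=200` was reached.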

It's nice not having to provision a GPU on my own server.
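How much of that borrowed GPU the model needs can be estimated with simple arithmetic, which also shows why the code loads the model in 8-bit. The sketch below counts weight memory only (parameters × bytes per parameter), assuming a round figure of about 7.3 billion parameters for Mistral 7B; activations, the KV cache, and quantization overhead are ignored, so real usage is higher.

```python
def weight_memory_gib(n_params: float, bytes_per_param: float) -> float:
    """Memory needed just to hold the weights, in GiB (2**30 bytes)."""
    return n_params * bytes_per_param / 2**30


N_PARAMS = 7.3e9  # assumed round parameter count for Mistral 7B

for label, nbytes in [("float16", 2), ("8-bit", 1), ("4-bit", 0.5)]:
    print(f"{label:>7}: ~{weight_memory_gib(N_PARAMS, nbytes):.1f} GiB")
```

This works out to roughly 13.6 GiB in float16, 6.8 GiB in 8-bit, and 3.4 GiB in 4-bit. On a GPU with around 15 to 16 GB, the float16 weights alone would leave very little headroom.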

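Why does pip get a ! in front of it in Google Colab? Because Colab cells are executed as Python, but pip is not a Python command. It is a shell command.

So inside a Python cell, you have to tell Colab "this line is a shell command."

You do that by putting ! at the start of the line: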

```
!pip install transformers
!apt-get update
!ls
```
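Roughly speaking, a !-prefixed line is handed to a shell in a separate process. The sketch below approximates that from plain Python with the standard library's `subprocess.run`. The helper `run_shell` is hypothetical and differs from Colab's actual behavior: it captures output instead of streaming it, and `check=True` makes it raise on a non-zero exit status.

```python
import subprocess


def run_shell(command: str) -> str:
    """Run one command line through the shell and return its stdout (hypothetical helper)."""
    result = subprocess.run(
        command,
        shell=True,            # interpret the string with the system shell, like !command
        capture_output=True,   # collect stdout/stderr instead of printing them live
        text=True,             # decode bytes to str
        check=True,            # raise CalledProcessError if the command fails
    )
    return result.stdout


print(run_shell("ls"))
```

For pip specifically, the pure-Python route is usually `subprocess.run([sys.executable, "-m", "pip", "install", "transformers"])`, which installs into the interpreter that is running the code.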