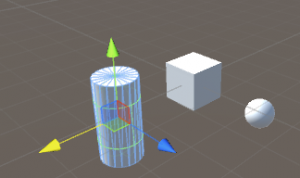

Easy to color, shade, and texture as well.

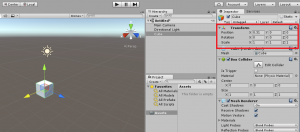

Assigning materials

What is a Material in Unity?

A shader plus its settings: the values (colors, textures, numbers such as smoothness) given to that shader's properties.

Textures
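Because a Material is a shader plus values for its properties, and a texture is one of those values, assigning a material or a texture from a script is a single property change. A minimal sketch; the class `AssignMaterial`, its fields, and its `Apply` helper are hypothetical names chosen for illustration. The same result can be had without code by dragging a material onto the object in the Scene view.

```csharp
using UnityEngine;

// Hypothetical example: assign a Material asset (and optionally a texture) to this object.
public class AssignMaterial : MonoBehaviour {
    public Material material;   // set in the Inspector
    public Texture2D texture;   // optional albedo texture

    void Start() {
        Apply(GetComponent<Renderer>(), material, texture);
    }

    public static void Apply(Renderer rend, Material material, Texture texture) {
        // Swap in the new material (shader + its settings).
        rend.material = material;
        if (texture != null) {
            // mainTexture is the shader's "_MainTex" property. Per Unity's docs,
            // modifying rend.material changes the material for this object only.
            rend.material.mainTexture = texture;
        }
    }
}
```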

Famous people in computer graphics

Ivan Sutherland, Edwin Catmull, Bui Tuong Phong

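Creating a shader: in the Project pane (or the Assets menu), Create -> Shader -> Standard Surface Shader.

The generated template is written in ShaderLab, with the surface function in HLSL (between CGPROGRAM and ENDCG):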

```shaderlab
Shader "Custom/NewSurfaceShader" {
    Properties {
        _Color ("Color", Color) = (1,1,1,1)
        _MainTex ("Albedo (RGB)", 2D) = "white" {}
        _Glossiness ("Smoothness", Range(0,1)) = 0.5
        _Metallic ("Metallic", Range(0,1)) = 0.0
    }
    SubShader {
        Tags { "RenderType"="Opaque" }
        LOD 200

        CGPROGRAM
        // Physically based Standard lighting model, and enable shadows on all light types
        #pragma surface surf Standard fullforwardshadows

        // Use shader model 3.0 target, to get nicer looking lighting
        #pragma target 3.0

        sampler2D _MainTex;

        struct Input {
            float2 uv_MainTex;
        };

        half _Glossiness;
        half _Metallic;
        fixed4 _Color;

        void surf (Input IN, inout SurfaceOutputStandard o) {
            // Albedo comes from a texture tinted by color
            fixed4 c = tex2D (_MainTex, IN.uv_MainTex) * _Color;
            o.Albedo = c.rgb;
            // Metallic and smoothness come from slider variables
            o.Metallic = _Metallic;
            o.Smoothness = _Glossiness;
            o.Alpha = c.a;
        }
        ENDCG
    }
    FallBack "Diffuse"
}
```

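Unity’s Standard Shader attempts to light objects in a “physically accurate” way. This technique is called Physically-Based Rendering, or PBR for short. Instead of defining how an object looks in one lighting environment, you specify the properties of the object (e.g. how metallic or plastic it is), and the shader works out its appearance under whatever lighting the scene has. The Metallic and Smoothness sliders in the template above are such properties.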
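With PBR you describe the surface rather than the lighting, so making an object look like metal or plastic is mostly a matter of setting shader properties. A minimal sketch, assuming a material built from the `Custom/NewSurfaceShader` template above; the class `PbrPresets` and the preset values are hypothetical, chosen only for illustration. The built-in Standard shader uses the same property names (`_Color`, `_Metallic`, `_Glossiness`). Note that `Shader.Find` returns null if the shader is not included in the build (it is always found in the Editor).

```csharp
using UnityEngine;

// Hypothetical helper: give an object a metal-like or plastic-like look by
// setting PBR properties on a material built from the custom surface shader.
public class PbrPresets : MonoBehaviour {
    public bool metal = true;

    void Start() {
        GetComponent<Renderer>().material = MakeMaterial(metal);
    }

    // Builds a new material; the property names match the shader's Properties block.
    public static Material MakeMaterial(bool metal) {
        Material mat = new Material(Shader.Find("Custom/NewSurfaceShader"));
        if (metal) {
            mat.SetColor("_Color", new Color(0.8f, 0.8f, 0.85f));
            mat.SetFloat("_Metallic", 1.0f);    // fully metallic
            mat.SetFloat("_Glossiness", 0.8f);  // labelled "Smoothness" in the Inspector
        } else {
            mat.SetColor("_Color", Color.red);
            mat.SetFloat("_Metallic", 0.0f);    // non-metal, like plastic
            mat.SetFloat("_Glossiness", 0.5f);
        }
        return mat;
    }
}
```

The lighting code never changes here: only the surface description does, which is the point of the PBR approach.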

Implement head rotation (rotate the view with the phone's gyroscope)

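```csharp
using UnityEngine;
using System.Collections;

// Rotates this GameObject (e.g. the camera) to match the phone's orientation.
public class HeadRotation : MonoBehaviour {

    void Start() {
        // Enable the gyroscope so attitude readings are available.
        Input.gyro.enabled = true;
    }

    void Update() {
        // Device orientation reported by the gyroscope (right-handed frame).
        Quaternion att = Input.gyro.attitude;
        // Flip z and w to convert to Unity's left-handed frame, then tilt 90 degrees
        // about x so that holding the phone upright, rather than flat, looks ahead.
        att = Quaternion.Euler(90f, 0f, 0f) * new Quaternion(att.x, att.y, -att.z, -att.w);
        transform.rotation = att;
    }
}
```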
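Why does `new Quaternion(att.x, att.y, -att.z, -att.w)` work? The gyroscope reports a right-handed attitude while Unity is left-handed; assuming the two frames differ only in the direction of z, converting between them means conjugating the rotation by a z-mirror. For a quaternion (x, y, z, w) that conjugation gives (x, y, -z, -w), equivalently (-x, -y, z, w), since q and -q encode the same rotation. The sketch below checks this numerically outside Unity with .NET's `System.Numerics` (a plain console program, not Unity code; `HandednessCheck`, `MirrorZ` and `ToLeftHanded` are made-up names): rotating a mirrored vector with the converted quaternion should give the mirror image of the original rotation.

```csharp
using System;
using System.Numerics;

public static class HandednessCheck {
    // Mirror across the xy-plane: flips z, as when switching handedness.
    public static Vector3 MirrorZ(Vector3 v) => new Vector3(v.X, v.Y, -v.Z);

    // Same component change as the HeadRotation script: (x, y, z, w) -> (x, y, -z, -w).
    public static Quaternion ToLeftHanded(Quaternion q) => new Quaternion(q.X, q.Y, -q.Z, -q.W);

    public static void Main() {
        // An arbitrary test rotation: 50 degrees about a tilted axis.
        Vector3 axis = Vector3.Normalize(new Vector3(1f, 2f, 3f));
        Quaternion q = Quaternion.CreateFromAxisAngle(axis, 50f * MathF.PI / 180f);
        Vector3 v = new Vector3(0.3f, -1.2f, 2.0f);

        // Rotate in the original frame, then mirror the result...
        Vector3 expected = MirrorZ(Vector3.Transform(v, q));
        // ...versus mirroring first, then rotating with the converted quaternion.
        Vector3 actual = Vector3.Transform(MirrorZ(v), ToLeftHanded(q));

        Console.WriteLine($"expected {expected}, actual {actual}");
        Console.WriteLine(Vector3.Distance(expected, actual) < 1e-4f ? "match" : "mismatch");
    }
}
```

The extra `Quaternion.Euler(90f, 0f, 0f)` factor in the script is a separate fixed re-orientation and is not part of the handedness conversion checked here.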