最近のトレンドはtransformarを用いた自然言語処理とのことで、wav2vecを使いたい

### ライブラリのinstall

$ pip3 install transformers datasets librosa

main.py

# -*- coding: utf-8 -*-

#! /usr/bin/python3

import librosa

import matplotlib.pyplot as plt

from IPython.display import display, Audio

import librosa.display

import numpy as np

import torch

from transformers import Wav2Vec2Processor, Wav2Vec2ForCTC

from datasets import load_dataset

import soundfile as sf

processor = Wav2Vec2Processor.from_pretrained("facebook/wav2vec2-base-960h")

model = Wav2Vec2ForCTC.from_pretrained("facebook/wav2vec2-base-960h")

def map_to_array(batch):

speech, sr_db = sf.read(batch["file"])

batch["speech"] = speech

batch['sr_db'] = sr_db

return batch

ds = load_dataset("patrickvonplaten/librispeech_asr_dummy","clean",split="validation")

ds = ds.map(map_to_array)

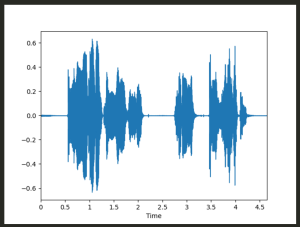

librosa.display.waveplot(np.array(ds['speech'][0]), sr=ds['sr_db'][0])

plt.savefig('01')

display(Audio(np.array(ds['speech'][0]), rate=ds['sr_db'][0]))

input_values = processor(ds["speech"][0], return_tensors="pt").input_values logits = model(input_values).logits predicted_ids = torch.argmax(logits, dim=-1) transcription = processor.decode(predicted_ids[0]) print(transcription)

$ python3 main.py

// 省略

A MAN SAID TO THE UNIVERSE SIR I EXIST

なんだこれええええええええええええええええええ

音声をvector graphicにしてるのはわかるが、、、