https://www.w3.org/TR/webrtc/

1. Introduction

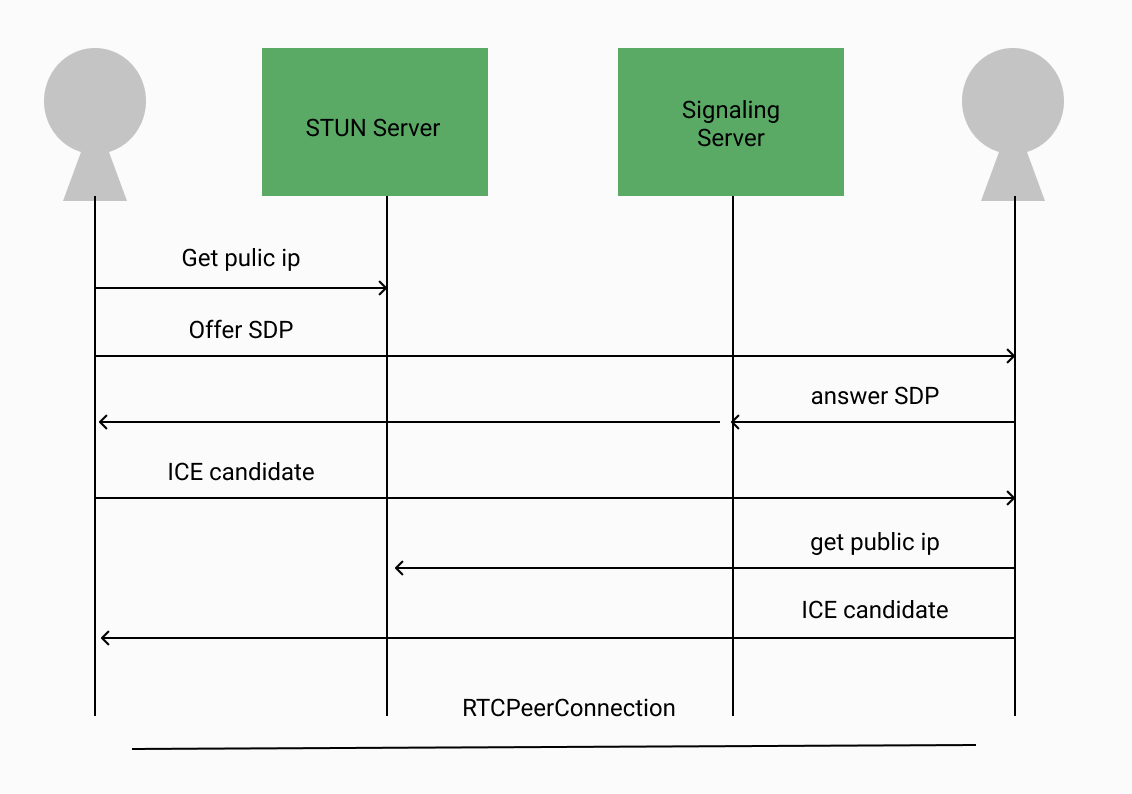

– Connecting to remote peers using NAT-traversal technologies such as ICE, STUN, and TURN

2. Conformance

3. Terminology

– The EventHandler interface, representing a callback used for event handlers, and the ErrorEvent interface are defined in HTML

4. Peer-to-peer connections

4.1 Introduction

– Communications are coordinated by the exchange of control messages (called a signaling protocol) over a signaling channel which is provided by unspecified means, but generally by a script in the page via the server, e.g. using XMLHttpRequest [xhr] or Web Sockets
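The examples in section 10 call `new SignalingChannel()` but never define it, because the spec leaves the signaling transport unspecified. For local experiments, an in-memory stand-in is enough. The sketch below assumes a hypothetical `SignalingChannel.pair()` helper that links two ends inside one JavaScript runtime. It JSON-encodes every message, matching the 10.1 comment that the channel "handles JSON.stringify/parse", and it delivers asynchronously, as an XMLHttpRequest or WebSocket channel would.

```javascript
// Hypothetical in-memory SignalingChannel, for experiments only (not part of WebRTC).
class SignalingChannel {
  constructor() {
    this.peer = null;      // the linked channel on the "other side"
    this.onmessage = null; // called with the parsed message object
  }

  send(message) {
    const target = this.peer;
    if (!target) throw new Error('channel is not connected');
    // Serialize now, so RTCSessionDescription/RTCIceCandidate go through toJSON().
    const text = JSON.stringify(message);
    // Deliver later, never synchronously, like a real network round trip.
    queueMicrotask(() => {
      if (target.onmessage) target.onmessage(JSON.parse(text));
    });
  }

  // Link two channels so that each one's send() reaches the other's onmessage.
  static pair() {
    const a = new SignalingChannel();
    const b = new SignalingChannel();
    a.peer = b;
    b.peer = a;
    return [a, b];
  }
}
```

In a real page, `send()` would post to a server over a WebSocket or XMLHttpRequest, and `onmessage` would fire when the server relays the other peer's message.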

4.2 Configuration

4.2.1 RTCConfiguration Dictionary

4.2.2 RTCIceCredentialType Enum

4.2.3 RTCIceServer Dictionary

```javascript
[
  {urls: 'stun:stun1.example.net'},
  {urls: ['turns:turn.example.org', 'turn:turn.example.net'],
   username: 'user',
   credential: 'myPassword',
   credentialType: 'password'},
];
```
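As these notes read the spec's "set the configuration" steps, each `urls` entry is parsed. A `turn:` or `turns:` URL must come with both `username` and `credential`, or an `InvalidAccessError` is thrown. The sketch below mirrors those checks. `validateIceServers` is a hypothetical helper, the errors are ordinary `Error` objects whose `name` imitates the DOMException names, and full URL parsing (RFC 7064/7065) is reduced to a scheme check.

```javascript
// Schemes accepted in RTCIceServer.urls.
const ICE_SCHEMES = new Set(['stun', 'stuns', 'turn', 'turns']);

// Build an Error whose name imitates a DOMException name.
function domError(name, message) {
  const err = new Error(message);
  err.name = name;
  return err;
}

// Hypothetical validator mirroring the iceServers checks; returns true if valid.
function validateIceServers(iceServers) {
  for (const server of iceServers) {
    // urls may be a single string or a list of strings.
    const urls = typeof server.urls === 'string' ? [server.urls] : server.urls;
    if (!Array.isArray(urls) || urls.length === 0) {
      throw domError('SyntaxError', 'RTCIceServer.urls is empty');
    }
    for (const url of urls) {
      const colon = url.indexOf(':');
      if (colon <= 0) throw domError('SyntaxError', `cannot parse ${url}`);
      const scheme = url.slice(0, colon);
      if (!ICE_SCHEMES.has(scheme)) {
        throw domError('NotSupportedError', `unsupported scheme in ${url}`);
      }
      // TURN servers need credentials.
      const isTurn = scheme === 'turn' || scheme === 'turns';
      if (isTurn && (server.username === undefined || server.credential === undefined)) {
        throw domError('InvalidAccessError', `${url} needs username and credential`);
      }
    }
  }
  return true;
}
```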

4.2.4 RTCIceTransportPolicy Enum

4.2.5 RTCBundlePolicy Enum

4.2.6 RTCRtcpMuxPolicy Enum

4.2.7 Offer/Answer Options
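The enums above end up as plain string members of the `RTCConfiguration` dictionary passed to the constructor. The offer options are passed to `createOffer()`. The following sketch sets every policy member to its default value. The STUN URL is a placeholder.

```javascript
// Placeholder configuration with each policy member at its default value.
const configuration = {
  iceServers: [{urls: 'stun:stun.example.org'}],
  iceTransportPolicy: 'all',  // 'relay' = use only relay candidates (e.g. via TURN)
  bundlePolicy: 'balanced',   // other values: 'max-compat', 'max-bundle'
  rtcpMuxPolicy: 'require',   // RTP and RTCP share a single transport
  iceCandidatePoolSize: 0,    // no candidates pre-gathered before setLocalDescription
};

// RTCOfferOptions: iceRestart defaults to false; true forces new ICE credentials.
const offerOptions = {iceRestart: false};
```

In a browser, these objects go to `new RTCPeerConnection(configuration)` and `pc.createOffer(offerOptions)`. Temporarily setting `iceTransportPolicy: 'relay'` is a simple way to check that a TURN server is actually reachable.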

4.3 State Definitions

4.3.1 RTCSignalingState Enum

Non-normative signalling state transitions diagram

– Caller transition:

new RTCPeerConnection(): stable

setLocalDescription(offer): have-local-offer

setRemoteDescription(pranswer): have-remote-pranswer (optional; an answer can also be applied directly in have-local-offer)

setRemoteDescription(answer): stable

– Callee transition:

new RTCPeerConnection(): stable

setRemoteDescription(offer): have-remote-offer

setLocalDescription(pranswer): have-local-pranswer (optional; an answer can also be applied directly in have-remote-offer)

setLocalDescription(answer): stable
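The caller and callee paths above can be encoded as a transition table, keyed by which side's description is applied and by its type. This sketch covers only the transitions listed here. The full diagram also includes `rollback`, which is omitted. `nextSignalingState` is a hypothetical helper. A real `RTCPeerConnection` rejects an out-of-order call with an `InvalidStateError`, and the sketch imitates that error name.

```javascript
// state -> { "<side>:<type>": nextState }, for the transitions listed above.
const SIGNALING_TRANSITIONS = {
  'stable': {'local:offer': 'have-local-offer', 'remote:offer': 'have-remote-offer'},
  // caller side
  'have-local-offer': {'remote:pranswer': 'have-remote-pranswer', 'remote:answer': 'stable'},
  'have-remote-pranswer': {'remote:answer': 'stable'},
  // callee side
  'have-remote-offer': {'local:pranswer': 'have-local-pranswer', 'local:answer': 'stable'},
  'have-local-pranswer': {'local:answer': 'stable'},
};

// side is 'local' (setLocalDescription) or 'remote' (setRemoteDescription).
function nextSignalingState(state, side, type) {
  const next = (SIGNALING_TRANSITIONS[state] || {})[`${side}:${type}`];
  if (next === undefined) {
    const err = new Error(`cannot apply ${side} ${type} in state ${state}`);
    err.name = 'InvalidStateError';
    throw err;
  }
  return next;
}
```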

4.3.2 RTCIceGatheringState Enum

4.3.3 RTCPeerConnectionState Enum

4.3.4 RTCIceConnectionState Enum

4.4 RTCPeerConnection Interface

– An RTCPeerConnection object has a signaling state, a connection state, an ICE gathering state, and an ICE connection state

4.4.1.1 Constructor

4.4.1.2 Chain an asynchronous operation

-> The algorithm is written out in full in the operations chain steps
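The chain guarantees that `createOffer`, `setLocalDescription`, and the other chained methods run one at a time. Each operation starts only after the previous one's promise has settled. The sketch below transcribes those steps. `OperationsChain` is a hypothetical class standing in for the `[[Operations]]` and `[[IsClosed]]` internal slots. The negotiation-needed bookkeeping that runs when the chain becomes empty is left out.

```javascript
// Hypothetical stand-in for the [[Operations]] / [[IsClosed]] internal slots.
class OperationsChain {
  constructor() {
    this.operations = [];  // [[Operations]]
    this.isClosed = false; // [[IsClosed]]
  }

  // operation: a function returning a value or a promise.
  chain(operation) {
    if (this.isClosed) {
      const err = new Error('connection is closed');
      err.name = 'InvalidStateError';
      return Promise.reject(err);
    }
    let resolveP;
    let rejectP;
    const p = new Promise((res, rej) => { resolveP = res; rejectP = rej; });
    const run = () => {
      let result;
      try {
        result = Promise.resolve(operation());
      } catch (err) {
        result = Promise.reject(err); // a synchronous throw counts as a rejection
      }
      // If the connection closed meanwhile, p is left pending ("abort these steps").
      result.then(
        (value) => { if (!this.isClosed) resolveP(value); },
        (err) => { if (!this.isClosed) rejectP(err); },
      );
    };
    // Once p settles, drop the finished operation and start the next one.
    const advance = () => {
      if (this.isClosed) return;
      this.operations.shift();
      if (this.operations.length > 0) this.operations[0]();
    };
    p.then(advance, advance);
    this.operations.push(run);
    if (this.operations.length === 1) run();
    return p;
  }
}
```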

4.4.1.3 Update the connection state
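The connection state is derived from the states of the connection's ICE and DTLS transports. The sketch below checks the rows of the `RTCPeerConnectionState` table in order: closed, failed, disconnected, new, connecting, connected. `computeConnectionState` is a hypothetical helper that takes the transport states as plain string arrays. It is not a browser API.

```javascript
// Derive RTCPeerConnectionState from transport states, checking rows in table order.
function computeConnectionState({isClosed, iceStates, dtlsStates}) {
  if (isClosed) return 'closed';
  if (iceStates.includes('failed') || dtlsStates.includes('failed')) return 'failed';
  if (iceStates.includes('disconnected')) return 'disconnected';
  const idle = (state) => state === 'new' || state === 'closed';
  // every() is true for empty arrays, which covers "there are no transports"
  if (iceStates.every(idle) && dtlsStates.every(idle)) return 'new';
  if (iceStates.some((s) => s === 'new' || s === 'checking') ||
      dtlsStates.some((s) => s === 'new' || s === 'connecting')) {
    return 'connecting';
  }
  // Remaining case: every ICE transport is connected/completed/closed
  // and every DTLS transport is connected/closed.
  return 'connected';
}
```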

4.4.1.4 Update the ICE gathering state
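The aggregate `iceGatheringState` is derived from the gathering states of all of the connection's ICE transports:

- `gathering` if any transport is gathering.
- `complete` only when at least one transport exists and all of them are complete.
- `new` otherwise, including when there are no transports.

The sketch below implements this with a hypothetical helper that takes plain strings.

```javascript
// Aggregate RTCIceGatheringState from per-transport gathering states.
function aggregateGatheringState(transportStates) {
  if (transportStates.includes('gathering')) return 'gathering';
  if (transportStates.length > 0 && transportStates.every((s) => s === 'complete')) {
    return 'complete';
  }
  return 'new'; // no transports, or some still "new" with none gathering
}
```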

4.4.1.5 Set the RTCSessionDescription

4.4.1.6 Set the configuration

4.4.2 Interface Definition

– createOffer, createAnswer, setLocalDescription, setRemoteDescription, addIceCandidate, restartIce, getConfiguration, setConfiguration, close

4.4.3 Legacy Interface Extensions

4.4.3.1 Method extensions

4.4.3.2 Legacy configuration extensions

4.4.4 Garbage collection

4.5 Error Handling

4.6 Session Description Model

4.6.1 RTCSdpType

– offer, pranswer, answer, rollback

4.6.2 RTCSessionDescription Class

4.7 Session Negotiation Model

– The negotiationneeded event is fired according to the state of the connection's negotiation-needed flag

4.7.1 Setting Negotiation-Needed

4.7.2 Clearing Negotiation-Needed

4.7.3 Updating the Negotiation-Needed flag
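Updating the flag is what keeps `negotiationneeded` from firing mid-negotiation or firing twice:

- If operations are still chained, the update is deferred until the chain empties.
- Outside the `stable` state, the update waits until the state returns to `stable`.
- The event fires only when the flag goes from false to true.

The following sketch runs these steps synchronously, whereas the spec queues a task for most of them. The `conn` object is a hypothetical stand-in for the internal slots. `isNegotiationNeeded` and `fireEvent` are caller-supplied callbacks standing in for "check if negotiation is needed" and event dispatch.

```javascript
// Hypothetical stand-in for the internal slots the algorithm reads and writes.
function createNegotiationState() {
  return {
    isClosed: false,
    operations: [],                                // [[Operations]]
    signalingState: 'stable',
    negotiationNeeded: false,                      // [[NegotiationNeeded]]
    updateNegotiationNeededFlagOnEmptyChain: false,
  };
}

function updateNegotiationNeededFlag(conn, isNegotiationNeeded, fireEvent) {
  if (conn.isClosed) return;
  if (conn.operations.length !== 0) {
    // Retry once the operations chain becomes empty.
    conn.updateNegotiationNeededFlagOnEmptyChain = true;
    return;
  }
  // Re-evaluated when the signaling state transitions back to "stable".
  if (conn.signalingState !== 'stable') return;
  if (!isNegotiationNeeded()) {
    conn.negotiationNeeded = false; // clear the flag
    return;
  }
  if (conn.negotiationNeeded) return; // already signaled; don't fire again
  conn.negotiationNeeded = true;
  fireEvent('negotiationneeded');
}
```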

4.8 Interfaces for Connectivity Establishment

4.8.1 RTCIceCandidate Interface

4.8.1.1 candidate-attribute Grammar

4.8.1.2 RTCIceProtocol Enum

4.8.1.3 RTCIceTcpCandidateType Enum

4.8.1.4 RTCIceCandidateType
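Most `RTCIceCandidate` attributes (protocol, tcpType, type, and so on) come from parsing the candidate-attribute string, whose grammar is defined in RFC 8839. The sketch below is a simplified parser. `parseCandidate` is a hypothetical helper, not a browser API. It splits on whitespace and treats everything after the candidate type as name/value pairs, storing unknown pairs such as `generation` under `extensions`.

```javascript
// Parse "candidate:<foundation> <component> <transport> <priority> <address> <port>
// typ <type> [raddr <addr>] [rport <port>] *(<name> <value>)".
function parseCandidate(line) {
  // Accept both an SDP line ("a=candidate:...") and RTCIceCandidate.candidate.
  const text = line.startsWith('a=') ? line.slice(2) : line;
  if (!text.startsWith('candidate:')) throw new SyntaxError('not a candidate-attribute');
  const parts = text.slice('candidate:'.length).trim().split(/\s+/);
  if (parts.length < 8 || parts[6] !== 'typ') {
    throw new SyntaxError('malformed candidate-attribute');
  }
  const candidate = {
    foundation: parts[0],
    component: Number(parts[1]) === 1 ? 'rtp' : 'rtcp', // RTCIceComponent
    protocol: parts[2].toLowerCase(),                   // RTCIceProtocol: udp | tcp
    priority: Number(parts[3]),
    address: parts[4],
    port: Number(parts[5]),
    type: parts[7],                                     // host | srflx | prflx | relay
    relatedAddress: null,
    relatedPort: null,
    tcpType: null,                                      // active | passive | so
    extensions: {},
  };
  for (let i = 8; i + 1 < parts.length; i += 2) {
    const name = parts[i];
    const value = parts[i + 1];
    if (name === 'raddr') candidate.relatedAddress = value;
    else if (name === 'rport') candidate.relatedPort = Number(value);
    else if (name === 'tcptype') candidate.tcpType = value;
    else candidate.extensions[name] = value;
  }
  return candidate;
}
```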

4.8.2 RTCPeerConnectionIceEvent

4.8.3 RTCPeerConnectionIceErrorEvent

4.9 Certificate Management

4.9.1 RTCCertificateExpiration Dictionary

4.9.2 RTCCertificate Interface

5. RTP Media API

5.1 RTCPeerConnection Interface Extensions

5.1.1 Processing Remote MediaStreamTracks

5.2 RTCRtpSender Interface

5.2.1 RTCRtpParameters

5.2.2 RTCRtpSendParameters

5.2.3 RTCRtpReceiveParameters

5.2.4 RTCRtpCodingParameters

5.2.5 RTCRtpDecodingParameters

5.2.6 RTCRtpEncodingParameters

5.2.7 RTCRtcpParameters

5.2.8 RTCRtpHeaderExtensionParameters

5.2.9 RTCRtpCodecParameters

5.2.10 RTCRtpCapabilities

5.2.11 RTCRtpCodecCapability

5.2.12 RTCRtpHeaderExtensionCapability

5.3 RTCRtpReceiver

5.4 RTCRtpTransceiver

5.5 RTCDtlsTransport

5.5.1 RTCDtlsFingerprint

5.6 RTCIceTransport

5.6.1 RTCIceParameters

5.6.2 RTCIceCandidatePair

5.6.3 RTCIceGathererState

5.6.4 RTCIceTransportState

5.6.5 RTCIceRole

5.6.6 RTCIceComponent

5.7 RTCTrackEvent

6. Peer-to-peer Data API

6.1 RTCPeerConnection Interface Extensions

6.1.1 RTCSctpTransport

6.1.1.1 Create an instance

6.1.1.2 Update max message size
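The max message size combines two limits:

- the remote `max-message-size` SDP attribute, which defaults to 65536 if absent;
- the number of bytes this client can send.

On either side, 0 means "any size". The sketch below is a direct transcription as a hypothetical helper. It assumes the caller has already parsed the SDP and passes `undefined` when the attribute is missing.

```javascript
// Returns the value for RTCSctpTransport.maxMessageSize.
function updateMaxMessageSize(remoteMaxMessageSize, canSendSize) {
  const remote = remoteMaxMessageSize === undefined ? 65536 : remoteMaxMessageSize;
  if (remote === 0 && canSendSize === 0) return Infinity;               // no limit anywhere
  if (remote === 0 || canSendSize === 0) return Math.max(remote, canSendSize); // one side unlimited
  return Math.min(remote, canSendSize);                                 // both limited
}
```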

6.1.1.3 Connected procedure

6.2 RTCDataChannel

6.2.1 Creating a data channel
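When a channel is created without an explicit `id`, the user agent picks one. The following constraints apply:

- RFC 8832 requires the DTLS client to use even SCTP stream identifiers and the DTLS server to use odd ones, which avoids glare.
- The WebRTC spec caps the ID at 65534.

The sketch below allocates IDs under these constraints. `allocateDataChannelId`, the choice of the lowest free ID, and returning `null` when none is left are assumptions for illustration, not spec behavior.

```javascript
const MAX_DATA_CHANNEL_ID = 65534; // 65535 is not a valid data channel id

// dtlsRole: 'client' (even ids) or 'server' (odd ids); usedIds: Set of ids in use.
function allocateDataChannelId(dtlsRole, usedIds) {
  for (let id = dtlsRole === 'client' ? 0 : 1; id <= MAX_DATA_CHANNEL_ID; id += 2) {
    if (!usedIds.has(id)) return id; // lowest free id with the right parity
  }
  return null; // no id left
}
```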

6.2.2 Announcing a data channel as open

6.2.3 Announcing a data channel instance

6.2.4 Closing procedure

6.2.5 Announcing a data channel as closed

6.2.6 Error on creating data channels

6.2.7 Receiving messages on a data channel

6.3 RTCDataChannelEvent

6.4 Garbage Collection

7. Peer-to-peer DTMF

7.1 RTCRtpSender Interface Extensions

7.2 RTCDTMFSender
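`insertDTMF(tones, duration, interToneGap)` validates its arguments before queuing anything:

- Tones may contain only `0-9`, `A-D` (case-insensitive), `#`, `*`, and `,`, otherwise an `InvalidCharacterError` is thrown.
- `duration` defaults to 100 ms and is clamped to 40..6000 ms.
- `interToneGap` defaults to 70 ms and is raised to at least 30 ms.

The sketch below applies these rules. `normalizeDtmfRequest` is a hypothetical helper, and it upper-cases the buffer on the basis that `a`-`d` are equivalent to `A`-`D`.

```javascript
const DTMF_TONES = /^[0-9A-D#*,]*$/i; // "," inserts a pause

function normalizeDtmfRequest(tones, duration = 100, interToneGap = 70) {
  if (!DTMF_TONES.test(tones)) {
    const err = new Error(`unrecognized DTMF tone in "${tones}"`);
    err.name = 'InvalidCharacterError';
    throw err;
  }
  return {
    toneBuffer: tones.toUpperCase(),
    duration: Math.min(Math.max(duration, 40), 6000),
    interToneGap: Math.max(interToneGap, 30),
  };
}
```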

7.3 canInsertDTMF algorithm

7.4 RTCDTMFToneChangeEvent

8. Statistics Model

8.1 Introduction

8.2 RTCPeerConnection Interface Extensions

8.3 RTCStatsReport

8.4 RTCStats
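An `RTCStatsReport` is a map from stats IDs to `RTCStats` dictionaries. Each dictionary carries `id`, `type`, and a `timestamp` in milliseconds. A common use is computing a rate from two snapshots taken with `pc.getStats()`. The sketch below does this for the send bitrate. It assumes the `outbound-rtp` type and its `bytesSent` field from the separate WebRTC statistics identifiers document. The helper names are hypothetical, and any `Map` can stand in for a real report.

```javascript
// Sum bytesSent over the outbound-rtp entries of one report (RTCStatsReport or Map).
function outboundTotals(report) {
  let bytes = 0;
  let timestamp = 0;
  for (const stats of report.values()) {
    if (stats.type === 'outbound-rtp') {
      bytes += stats.bytesSent;
      timestamp = Math.max(timestamp, stats.timestamp);
    }
  }
  return {bytes, timestamp};
}

// Average send bitrate in bits per second between two snapshots.
function sendBitrate(previousReport, currentReport) {
  const before = outboundTotals(previousReport);
  const after = outboundTotals(currentReport);
  const seconds = (after.timestamp - before.timestamp) / 1000; // timestamps are in ms
  return seconds > 0 ? (8 * (after.bytes - before.bytes)) / seconds : 0;
}
```

In a browser, call `await pc.getStats()` twice, for example one second apart, and pass both reports to `sendBitrate`.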

8.5 The stats selection algorithm

8.6 Mandatory To Implement Stats

9. Media Stream API Extensions for Network Use

9.2 MediaStream

9.2.1 id

9.3 MediaStreamTrack

9.3.1 MediaTrackSupportedConstraints, MediaTrackCapabilities, MediaTrackConstraints and MediaTrackSettings

10. Examples and Call Flows

10.1 Simple Peer-to-peer Example

```javascript
const signaling = new SignalingChannel(); // handles JSON.stringify/parse
const constraints = {audio: true, video: true};
const configuration = {iceServers: [{urls: 'stun:stun.example.org'}]};
const pc = new RTCPeerConnection(configuration);

// send any ice candidates to the other peer
pc.onicecandidate = ({candidate}) => signaling.send({candidate});

// let the "negotiationneeded" event trigger offer generation
pc.onnegotiationneeded = async () => {
  try {
    await pc.setLocalDescription(await pc.createOffer());
    // send the offer to the other peer
    signaling.send({desc: pc.localDescription});
  } catch (err) {
    console.error(err);
  }
};

// once media for a remote track arrives, show it in the remote video element
pc.ontrack = (event) => {
  // don't set srcObject again if it is already set.
  if (remoteView.srcObject) return;
  remoteView.srcObject = event.streams[0];
};

// call start() to initiate
async function start() {
  try {
    // get a local stream, show it in a self-view and add it to be sent
    const stream = await navigator.mediaDevices.getUserMedia(constraints);
    stream.getTracks().forEach((track) => pc.addTrack(track, stream));
    selfView.srcObject = stream;
  } catch (err) {
    console.error(err);
  }
}

signaling.onmessage = async ({desc, candidate}) => {
  try {
    if (desc) {
      // if we get an offer, we need to reply with an answer
      if (desc.type == 'offer') {
        await pc.setRemoteDescription(desc);
        const stream = await navigator.mediaDevices.getUserMedia(constraints);
        stream.getTracks().forEach((track) => pc.addTrack(track, stream));
        await pc.setLocalDescription(await pc.createAnswer());
        signaling.send({desc: pc.localDescription});
      } else if (desc.type == 'answer') {
        await pc.setRemoteDescription(desc);
      } else {
        console.log('Unsupported SDP type. Your code may differ here.');
      }
    } else if (candidate) {
      await pc.addIceCandidate(candidate);
    }
  } catch (err) {
    console.error(err);
  }
};
```

10.2 Advanced Peer-to-peer Example with Warm-up

```javascript
const signaling = new SignalingChannel();
const configuration = {iceServers: [{urls: 'stun:stun.example.org'}]};
let audio = null;
let audioSendTrack = null;
let video = null;
let videoSendTrack = null;
let started = false;
let pc;

// Call warmup() to warm-up ICE, DTLS, and media, but not send media yet.
async function warmup(isAnswerer) {
  pc = new RTCPeerConnection(configuration);
  if (!isAnswerer) {
    audio = pc.addTransceiver('audio');
    video = pc.addTransceiver('video');
  }

  // send any ice candidates to the other peer
  pc.onicecandidate = (event) => {
    signaling.send(JSON.stringify({candidate: event.candidate}));
  };

  // let the "negotiationneeded" event trigger offer generation
  pc.onnegotiationneeded = async () => {
    try {
      await pc.setLocalDescription(await pc.createOffer());
      // send the offer to the other peer
      signaling.send(JSON.stringify({desc: pc.localDescription}));
    } catch (err) {
      console.error(err);
    }
  };

  // once media for the remote track arrives, show it in the remote video element
  pc.ontrack = async (event) => {
    try {
      if (event.track.kind == 'audio') {
        if (isAnswerer) {
          audio = event.transceiver;
          audio.direction = 'sendrecv';
          if (started && audioSendTrack) {
            await audio.sender.replaceTrack(audioSendTrack);
          }
        }
      } else if (event.track.kind == 'video') {
        if (isAnswerer) {
          video = event.transceiver;
          video.direction = 'sendrecv';
          if (started && videoSendTrack) {
            await video.sender.replaceTrack(videoSendTrack);
          }
        }
      }

      // don't set srcObject again if it is already set.
      if (!remoteView.srcObject) {
        remoteView.srcObject = new MediaStream();
      }
      remoteView.srcObject.addTrack(event.track);
    } catch (err) {
      console.error(err);
    }
  };

  try {
    // get a local stream, show it in a self-view and add it to be sent
    const stream = await navigator.mediaDevices.getUserMedia({audio: true,
                                                              video: true});
    selfView.srcObject = stream;
    audioSendTrack = stream.getAudioTracks()[0];
    // on the answerer, the transceivers only exist once ontrack has fired
    if (started && audio) {
      await audio.sender.replaceTrack(audioSendTrack);
    }
    videoSendTrack = stream.getVideoTracks()[0];
    if (started && video) {
      await video.sender.replaceTrack(videoSendTrack);
    }
  } catch (err) {
    console.error(err);
  }
}

// Call start() to start sending media.
async function start() {
  started = true;
  signaling.send(JSON.stringify({start: true}));
  try {
    // start sending our own media too, if warm-up has already produced the tracks
    if (audio && audioSendTrack) {
      await audio.sender.replaceTrack(audioSendTrack);
    }
    if (video && videoSendTrack) {
      await video.sender.replaceTrack(videoSendTrack);
    }
  } catch (err) {
    console.error(err);
  }
}

signaling.onmessage = async (event) => {
  if (!pc) warmup(true);
  try {
    const message = JSON.parse(event.data);
    if (message.desc) {
      const desc = message.desc;
      // if we get an offer, we need to reply with an answer
      if (desc.type == 'offer') {
        await pc.setRemoteDescription(desc);
        await pc.setLocalDescription(await pc.createAnswer());
        signaling.send(JSON.stringify({desc: pc.localDescription}));
      } else {
        await pc.setRemoteDescription(desc);
      }
    } else if (message.start) {
      started = true;
      if (audio && audioSendTrack) {
        await audio.sender.replaceTrack(audioSendTrack);
      }
      if (video && videoSendTrack) {
        await video.sender.replaceTrack(videoSendTrack);
      }
    } else {
      await pc.addIceCandidate(message.candidate);
    }
  } catch (err) {
    console.error(err);
  }
};
```

10.3 Simulcast Example
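Simulcast is configured by passing several `sendEncodings` to `addTransceiver()`. Each encoding has its own `rid` and `scaleResolutionDownBy`, both members of `RTCRtpEncodingParameters`. The sketch below builds such a list, with each layer at half the resolution of the next. `simulcastEncodings` and the rid names `q`/`h`/`f` (quarter, half, full) are illustrative assumptions.

```javascript
// rids ordered from lowest to highest resolution, e.g. ['q', 'h', 'f'].
// The last layer is sent at full resolution; each earlier one is halved again.
function simulcastEncodings(rids) {
  return rids.map((rid, i) => ({
    rid,
    scaleResolutionDownBy: 2 ** (rids.length - 1 - i),
  }));
}
```

In a browser, the result would be used as `pc.addTransceiver(videoTrack, {direction: 'sendonly', sendEncodings: simulcastEncodings(['q', 'h', 'f'])})`, where `videoTrack` is a camera track obtained from `getUserMedia()`.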

10.4 Peer-to-peer Data Example

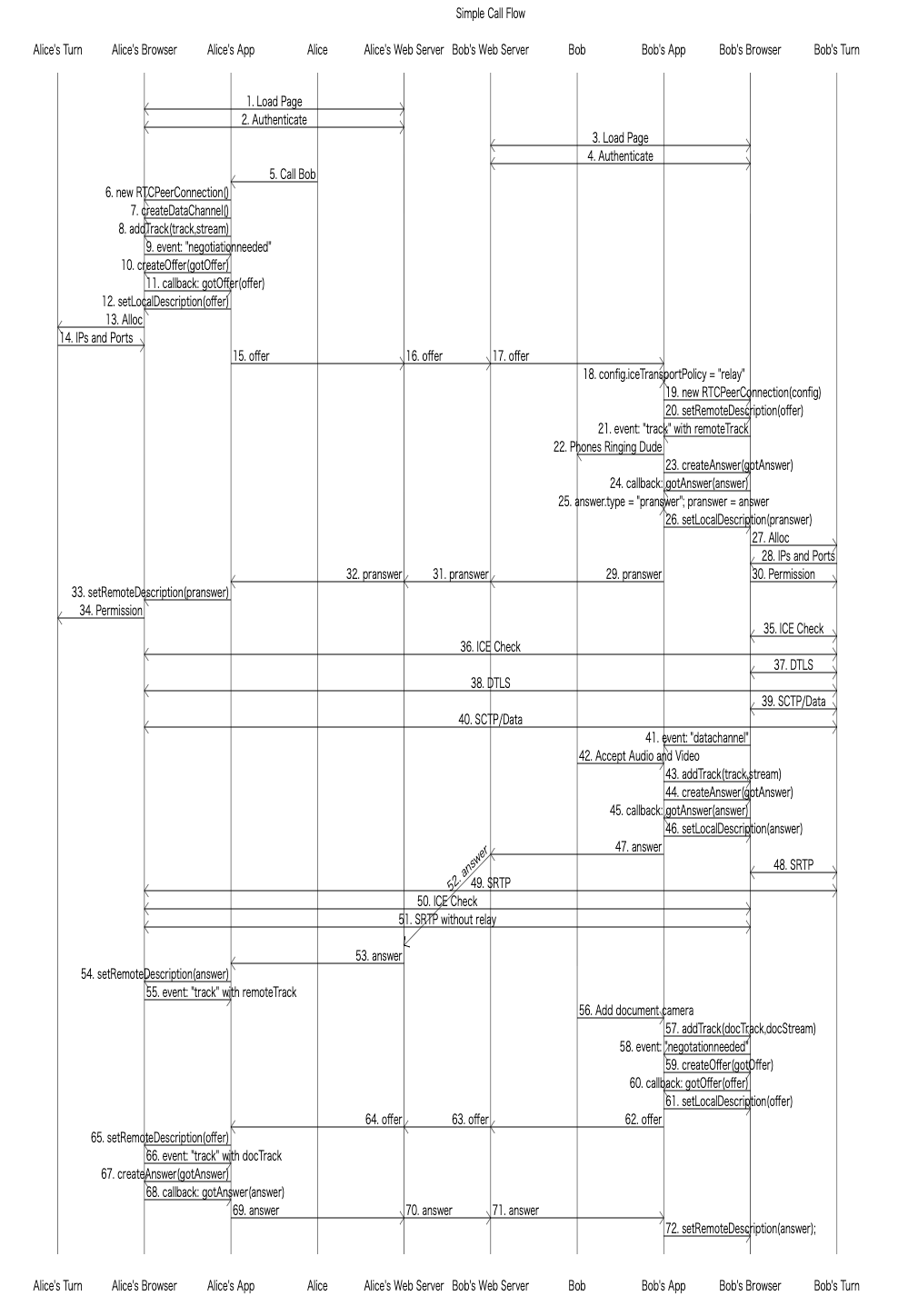

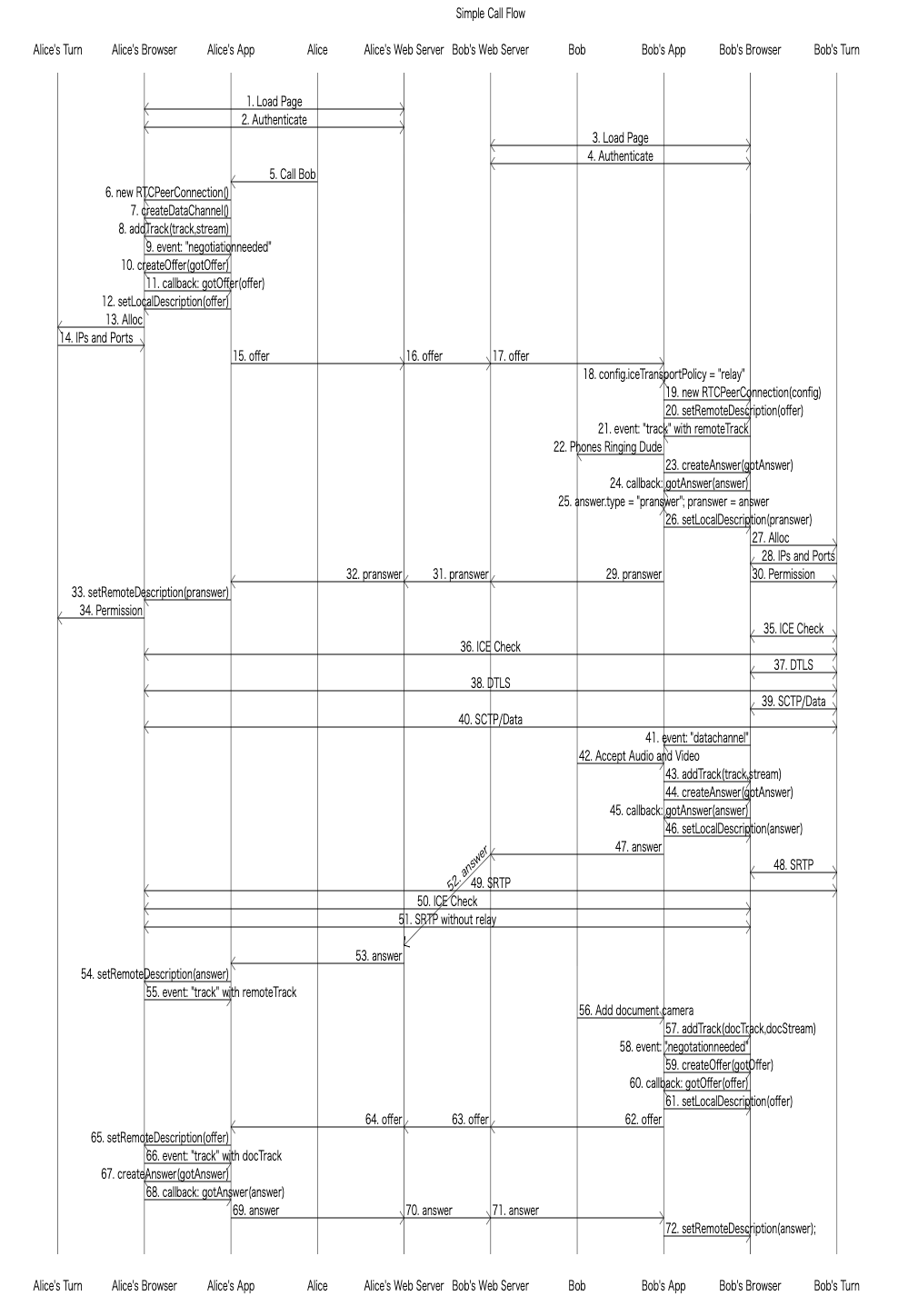

10.5 Call Flow Browser to Browser

10.6 DTMF Example

11. Error Handling

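-> Besides the IP address and protocol (TCP/UDP), an ICE candidate also carries a port number, a component, a type (host/srflx/relay), a priority (computed from the type preference, local preference, component ID, etc.), a foundation (used to make hole punching more efficient), and a base (used to prune redundant candidates)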
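RFC 8445 (section 5.1.2.1) defines the candidate priority as 2^24 × type preference + 2^8 × local preference + (256 − component ID). The recommended type preferences are 126 for host, 110 for peer-reflexive, 100 for server-reflexive, and 0 for relay. The sketch below encodes the formula. `candidatePriority` is a hypothetical helper, and local preference 65535 is the usual choice for a host with a single interface.

```javascript
// RFC 8445 recommended type preferences.
const TYPE_PREFERENCE = {host: 126, prflx: 110, srflx: 100, relay: 0};

// localPreference: 0..65535, componentId: 1 (RTP) or 2 (RTCP).
function candidatePriority(type, localPreference, componentId) {
  const typePreference = TYPE_PREFERENCE[type];
  if (typePreference === undefined) throw new RangeError(`unknown candidate type ${type}`);
  return (2 ** 24) * typePreference + (2 ** 8) * localPreference + (256 - componentId);
}
```

For example, `candidatePriority('host', 65535, 1)` gives 2130706431, a value often seen in host candidates in SDP.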

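-> Gathering (collect IP address and port pairs), exchanging (swap candidates with the peer), organizing (pair the remote candidates with the local ones, then deduplicate and sort), hole punching (try to connect on each pair), and converging (settle on the final candidate pair)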
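The "organizing" step pairs local and remote candidates and ranks them. RFC 8445 (section 6.1.2.3) gives the pair priority as 2^32 × MIN(G, D) + 2 × MAX(G, D) + (G > D ? 1 : 0). Here G is the controlling agent's candidate priority and D the controlled agent's. The sketch below uses `BigInt` because the result can exceed 2^53. The check list it builds is simplified and its helper names are hypothetical:

- Pairing uses only the component ID and ignores address families.
- Pairs that repeat a (local base, remote candidate) combination are dropped, keeping the highest-priority one.
- Pair states and list-size limits are omitted.

```javascript
// Pair priority per RFC 8445; g = controlling side's priority, d = controlled side's.
function pairPriority(g, d) {
  const G = BigInt(g);
  const D = BigInt(d);
  const min = G < D ? G : D;
  const max = G > D ? G : D;
  return (1n << 32n) * min + 2n * max + (G > D ? 1n : 0n);
}

// locals need {component, priority, base: "ip:port"}; remotes need {component, priority, address, port}.
function formCheckList(localCandidates, remoteCandidates, isControlling) {
  const pairs = [];
  for (const local of localCandidates) {
    for (const remote of remoteCandidates) {
      if (local.component !== remote.component) continue;
      const [g, d] = isControlling
        ? [local.priority, remote.priority]
        : [remote.priority, local.priority];
      pairs.push({local, remote, priority: pairPriority(g, d)});
    }
  }
  // Highest priority first (BigInt values, so compare instead of subtracting).
  pairs.sort((a, b) => (a.priority > b.priority ? -1 : a.priority < b.priority ? 1 : 0));
  // Keep only the first (best) pair for each local base + remote candidate.
  const seen = new Set();
  return pairs.filter(({local, remote}) => {
    const key = `${local.base}|${remote.address}:${remote.port}`;
    if (seen.has(key)) return false;
    seen.add(key);
    return true;
  });
}
```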

-> Get local candidates (host), get the NAT's external candidates via STUN (srflx), and send an Allocation Request to the TURN server (relay)
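A server-reflexive candidate comes from a STUN Binding request. The exchange works as follows:

- The client sends a 20-byte header: type 0x0001, length, the magic cookie 0x2112A442, and a 96-bit transaction ID.
- The server answers with a Binding Success Response (0x0101).
- The response carries an XOR-MAPPED-ADDRESS attribute (0x0020). Its port is XORed with the top 16 bits of the cookie, and its IPv4 address with the whole cookie.

The sketch below covers only the byte layout from RFC 8489. It handles IPv4 only and does no socket I/O. The helper names are hypothetical.

```javascript
// STUN constants from RFC 8489.
const MAGIC_COOKIE = 0x2112a442;
const BINDING_REQUEST = 0x0001;
const BINDING_SUCCESS = 0x0101;
const XOR_MAPPED_ADDRESS = 0x0020;

// Build a Binding Request with no attributes; transactionId is a 12-byte Uint8Array.
function buildBindingRequest(transactionId) {
  if (transactionId.length !== 12) throw new RangeError('transaction ID must be 12 bytes');
  const msg = new Uint8Array(20);
  const view = new DataView(msg.buffer);
  view.setUint16(0, BINDING_REQUEST); // message type
  view.setUint16(2, 0);               // message length (no attributes)
  view.setUint32(4, MAGIC_COOKIE);    // magic cookie
  msg.set(transactionId, 8);          // transaction ID
  return msg;
}

// Return {ip, port} from an IPv4 XOR-MAPPED-ADDRESS, or null if there is none.
function parseXorMappedAddress(msg) {
  const view = new DataView(msg.buffer, msg.byteOffset, msg.byteLength);
  if (view.getUint16(0) !== BINDING_SUCCESS || view.getUint32(4) !== MAGIC_COOKIE) {
    throw new Error('not a STUN Binding Success Response');
  }
  const end = 20 + view.getUint16(2);
  for (let off = 20; off + 4 <= end; ) {
    const type = view.getUint16(off);
    const length = view.getUint16(off + 2);
    // value layout: reserved(1) family(1) x-port(2) x-address(4); family 0x01 = IPv4
    if (type === XOR_MAPPED_ADDRESS && view.getUint8(off + 5) === 0x01) {
      const port = view.getUint16(off + 6) ^ (MAGIC_COOKIE >>> 16);
      const addr = (view.getUint32(off + 8) ^ MAGIC_COOKIE) >>> 0;
      const ip = [24, 16, 8, 0].map((shift) => (addr >>> shift) & 0xff).join('.');
      return {ip, port};
    }
    off += 4 + Math.ceil(length / 4) * 4; // attribute values are padded to 4 bytes
  }
  return null;
}
```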